For decades, software has been built like architecture, static and unchanging. Code is written, tested, deployed, and expected to handle what comes next. A function does what it was programmed to do, a database stores what it's told to store, and when requirements change, new code is shipped. This model worked brilliantly for deterministic systems where inputs, outputs, and behaviors could be specified upfront. Like a building, once constructed, it stands fixed in place, reliable precisely because it doesn't move.

But consider a spinning top. At rest, it topples immediately, unstable no matter how carefully it is balanced. Set it spinning, however, and it stays upright, holding its equilibrium through continuous motion. The stability isn't in being still; it's in the physics of angular momentum, in adaptation rather than rigidity.

When AI entered the world of static systems, it was treated as just another component. Machine learning models became microservices, inference became API calls, and neural networks sat behind REST endpoints. Intelligence was bolted onto existing architectures, wrapping probabilistic systems in deterministic interfaces. The infrastructure remained fundamentally unchanged, just with smarter components added. The spinning top was forced to hold still.

But something fundamental is changing. As generative AI models demonstrate reasoning, planning, and adaptive behavior, the boundary between "system" and "intelligence" dissolves. The old paradigm, stability through stasis, cannot handle systems that must continuously learn, adapt, and evolve. Infrastructure itself must become cognitive, maintaining stability not through fixed rules but through mathematical principles that govern continuous adaptation.

Recent research points to this transformation [1]: future systems would integrate generative AI's cognitive capabilities with software engineering principles to create infrastructures that adapt, learn, and govern themselves. This isn't augmentation or enhancement; it's a complete reconception of what systems can be [2]. The infrastructure doesn't just execute intelligence; it embodies it. And like the spinning top, it maintains stability through the very motion that traditional architectures would consider dangerous.

Infrastructure Becomes Cognitive

The shift from traditional to AI-Native architecture represents more than a technological upgrade. It's a fundamental reimagining of how systems operate, evolve, and maintain themselves. Where traditional stacks separate logic from execution and data from behavior, AI-Native architectures weave learning and adaptation into every layer. The result is infrastructure that observes its own performance, adjusts its strategies, and optimizes its operations without human intervention.

The Old Way

Traditional architectures treat intelligence as external. They route requests through fixed rules encoded at design time, scale with load balancers that know nothing about request complexity, and can fail when patterns shift beyond their programmed tolerances. Every decision follows a predetermined path. The system never learns from outcomes, never adapts to changing conditions, never questions its own behavior. It only executes what developers anticipated months or years earlier. When reality deviates from expectation, the system breaks, and humans must intervene to write new rules.

The Cognitive Way

AI-Native systems embed cognition at every layer of the stack. Routers don't follow static rules; they learn which models handle which query types most effectively and adapt their strategies based on observed success patterns [3]. Orchestrators don't scale blindly; they balance performance against system complexity using mathematical stability criteria, adopting new patterns only when provably beneficial [4]. Load balancers become learning agents. Monitoring becomes prediction. The infrastructure itself reasons about its own behavior, maintains models of its performance, and optimizes its decisions through continuous feedback loops. When reality shifts, the system shifts with it.
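
To make the learning-router idea concrete, here is a minimal Python sketch. It uses an epsilon-greedy multi-armed bandit, one simple technique chosen for illustration; it is not the algorithm of [3], which addresses routing under budget constraints. The class name `LearningRouter`, the model names, and the binary success signal are hypothetical assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


class LearningRouter:
    """Epsilon-greedy router that learns, per query type, which model succeeds most often."""

    def __init__(self, models: List[str], epsilon: float = 0.1, seed=None):
        self.models = list(models)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        # (query_type, model) -> [successes, attempts]
        self.stats: Dict[Tuple[str, str], List[int]] = defaultdict(lambda: [0, 0])

    def success_rate(self, query_type: str, model: str) -> float:
        successes, attempts = self.stats[(query_type, model)]
        # Untried models get an optimistic score of 1.0, which encourages trying them early.
        return successes / attempts if attempts else 1.0

    def route(self, query_type: str) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.models)  # explore a random model
        # Exploit: pick the model with the best observed success rate for this query type.
        return max(self.models, key=lambda m: self.success_rate(query_type, m))

    def record(self, query_type: str, model: str, success: bool) -> None:
        entry = self.stats[(query_type, model)]
        entry[0] += int(success)
        entry[1] += 1


if __name__ == "__main__":
    # Hypothetical models; the feedback below is simulated, not measured.
    router = LearningRouter(["general-model", "code-model"], epsilon=0.1, seed=0)
    for _ in range(500):
        model = router.route("code")
        success = router.rng.random() < (0.9 if model == "code-model" else 0.4)
        router.record("code", model, success)
    for m in router.models:
        print(m, round(router.success_rate("code", m), 2))
```

Epsilon-greedy keeps a small fraction of traffic exploring, so the router can notice when a previously weaker model starts performing better; contextual or budget-aware bandits are natural refinements.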

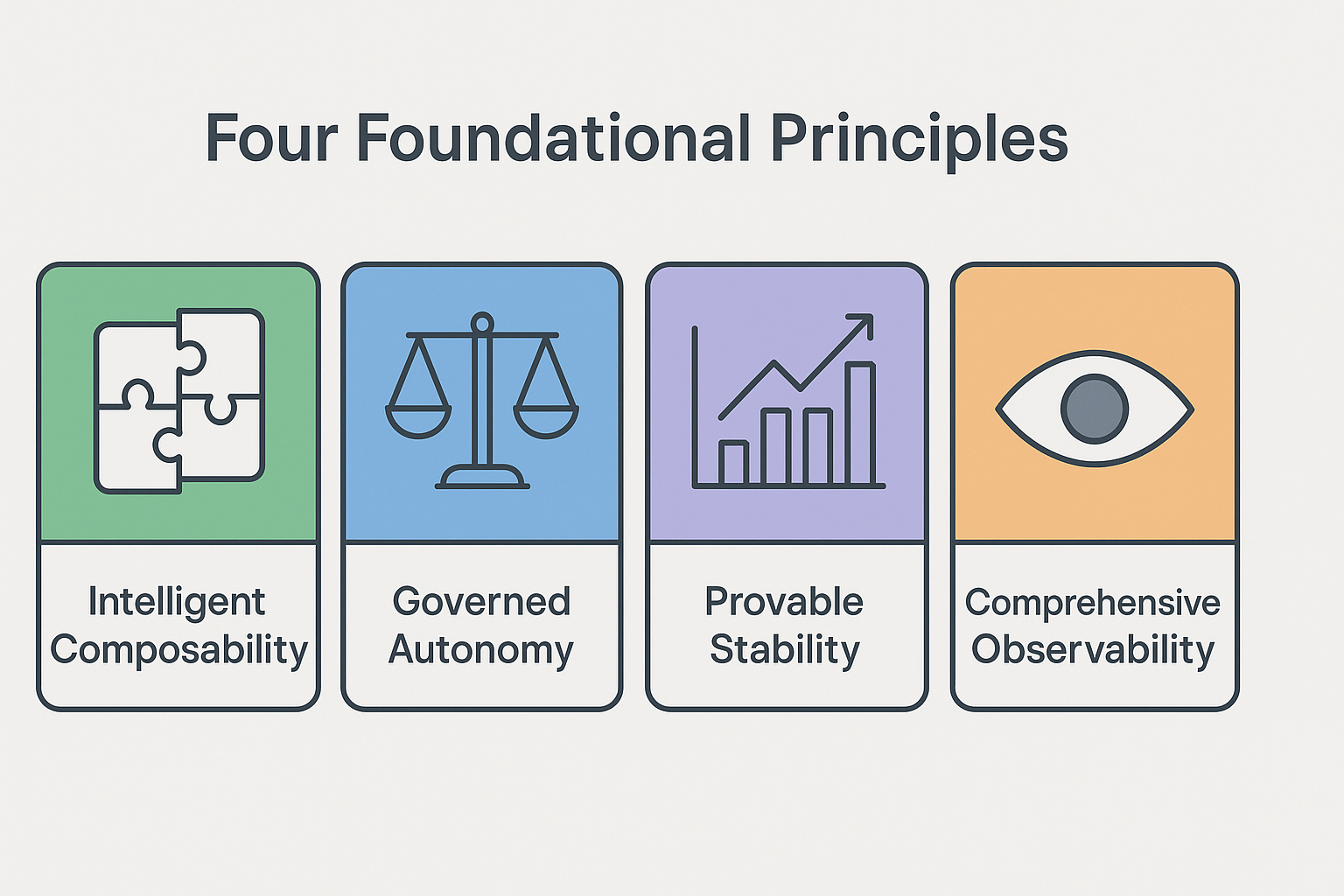

Four Foundational Principles

What distinguishes AI-Native architecture from traditional systems augmented with AI components? Four core principles could guide this new paradigm, each addressing fundamental challenges that emerge when intelligence becomes infrastructure. These aren't implementation details or specific algorithms, but rather architectural considerations that would characterize effective AI-Native systems. Together, they could create systems that are modular yet coordinated, autonomous yet accountable, adaptive yet stable, and powerful yet transparent.

1. Intelligent Composability

AI-Native architectures reject monolithic intelligence in favor of specialized, coordinating components. Rather than deploying a single massive model to handle all tasks, systems decompose capabilities into focused cells or agents, each expert in a specific domain [5]. A cell optimized for code generation works alongside another specialized in data analysis, coordinated through intelligent routers that understand task semantics and agent capabilities.

This composability extends beyond simple microservices. Components actively collaborate, share context, and delegate subtasks based on their assessed strengths. The system dynamically assembles the right combination of capabilities for each request, much like how human teams form around problems [6]. When one component fails or performs poorly, others compensate. When new capabilities emerge, they integrate seamlessly into the existing orchestration without requiring architectural rewrites.
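
A minimal sketch of this pattern, assuming each cell exposes a set of skill labels and a plain Python callable: the hypothetical `Orchestrator` dispatches each subtask to the highest-scoring cell that claims the skill and falls back to the next candidate when a cell raises. The scoring rule (an exponential moving average of success) is an illustrative assumption, not a method from [5] or [6].

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple


@dataclass
class Cell:
    name: str
    skills: Set[str]
    handler: Callable[[str], str]
    score: float = 1.0  # running quality estimate in [0, 1]; higher is preferred


class Orchestrator:
    def __init__(self) -> None:
        self.cells: List[Cell] = []

    def register(self, cell: Cell) -> None:
        # New capabilities plug in without changing the dispatch logic.
        self.cells.append(cell)

    def dispatch(self, skill: str, task: str) -> str:
        # Candidates that claim the skill, best-scoring first.
        candidates = sorted(
            (c for c in self.cells if skill in c.skills),
            key=lambda c: c.score,
            reverse=True,
        )
        errors = []
        for cell in candidates:
            try:
                result = cell.handler(task)
            except Exception as exc:
                # Penalize the failing cell and fall back to the next candidate.
                cell.score *= 0.9
                errors.append(f"{cell.name}: {exc}")
                continue
            cell.score = 0.9 * cell.score + 0.1  # reward success
            return result
        raise RuntimeError(f"no cell could handle {skill!r}: {errors}")

    def run(self, plan: List[Tuple[str, str]]) -> List[str]:
        """Execute a decomposed request given as (skill, subtask) pairs."""
        return [self.dispatch(skill, task) for skill, task in plan]
```

Because cells are discovered by skill label at dispatch time, registering a new cell is enough to make it eligible for future requests.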

This becomes essential as GenAI systems face open-ended queries, shifting user intent, and probabilistic outputs. Traditional systems assume predictable inputs, but AI-Native architectures must handle uncertainty through adaptive routing [13] and dynamic resource allocation [14] to maintain service quality.
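
One common way to handle uncertain queries is a model cascade: try a cheaper model first and escalate only when its confidence is too low. The sketch below assumes each model returns an answer together with a calibrated confidence in [0, 1], which real systems must estimate separately; the function and tier names are hypothetical.

```python
from typing import Callable, List, Tuple

# A model here is any callable returning (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


def cascade(query: str, tiers: List[Tuple[str, Model, float]]) -> Tuple[str, str]:
    """Try models from cheapest to most capable, escalating while confidence is too low.

    tiers: (name, model, min_confidence) ordered by increasing cost.
    Returns (model_name, answer). The last tier's answer is always accepted.
    """
    for i, (name, model, threshold) in enumerate(tiers):
        answer, confidence = model(query)
        if confidence >= threshold or i == len(tiers) - 1:
            return name, answer
    raise ValueError("tiers must not be empty")
```

Easy queries stop at the cheap tier, so expensive capacity is spent only where the cheap model signals uncertainty.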

The result is infrastructure that scales not through brute force, but through intelligent specialization and coordination.

2. Governed Autonomy

Autonomy without oversight is chaos. As agentic AI systems gain the ability to take actions, invoke tools, and make consequential decisions, governance cannot be an afterthought; it must be infrastructure [7].

AI-Native architectures embed governance throughout the stack. Policy enforcement happens at runtime, not during development. Systems intercept agent outputs, evaluate them against declarative rules, and decide whether to allow, modify, or block actions before they execute. Trust scores track each component's compliance history. When violations occur, containment strategies adapt automatically [8], from increased monitoring to capability restrictions to complete isolation.
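
The runtime flow described above (intercept, evaluate against declarative rules, then allow, modify, or block, with trust-driven containment) can be sketched in Python. Everything here is a hypothetical illustration: the rule format, the trust penalty of 0.3, the containment thresholds, and the read-only tool allowlist are assumptions, not the designs of [7] or [8].

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Optional, Tuple


class Verdict(Enum):
    ALLOW = "allow"
    MODIFY = "modify"
    BLOCK = "block"


@dataclass
class Rule:
    name: str
    matches: Callable[[dict], bool]                   # does the rule apply to this action?
    verdict: Verdict
    rewrite: Optional[Callable[[dict], dict]] = None  # used only when verdict is MODIFY


class PolicyEngine:
    # (minimum trust score, containment level), checked from most to least trusted.
    LEVELS = [(0.8, "normal"), (0.5, "monitored"), (0.2, "restricted"), (0.0, "isolated")]
    READ_ONLY_TOOLS = {"search", "read_file"}  # hypothetical allowlist for restricted agents

    def __init__(self, rules: List[Rule], penalty: float = 0.3):
        self.rules = rules
        self.penalty = penalty
        self.trust: Dict[str, float] = {}

    def containment(self, agent: str) -> str:
        score = self.trust.get(agent, 1.0)
        for floor, level in self.LEVELS:
            if score >= floor:
                return level
        return "isolated"

    def evaluate(self, agent: str, action: dict) -> Tuple[Verdict, dict]:
        """Decide whether a proposed action runs as-is, runs modified, or is blocked."""
        level = self.containment(agent)
        if level == "isolated":
            return Verdict.BLOCK, action
        verdict = Verdict.ALLOW
        for rule in self.rules:
            if not rule.matches(action):
                continue
            if rule.verdict is Verdict.BLOCK:
                # Each violation lowers trust, which can escalate containment.
                self.trust[agent] = max(0.0, self.trust.get(agent, 1.0) - self.penalty)
                return Verdict.BLOCK, action
            if rule.verdict is Verdict.MODIFY and rule.rewrite is not None:
                action, verdict = rule.rewrite(action), Verdict.MODIFY
        # Restricted agents may only use read-only tools.
        if level == "restricted" and action.get("tool") not in self.READ_ONLY_TOOLS:
            return Verdict.BLOCK, action
        return verdict, action


# Hypothetical declarative rules for illustration.
EXAMPLE_RULES = [
    Rule("no-db-drop", lambda a: a.get("tool") == "delete_database", Verdict.BLOCK),
    Rule(
        "cap-transfers",
        lambda a: a.get("tool") == "transfer" and a.get("amount", 0) > 1000,
        Verdict.MODIFY,
        rewrite=lambda a: {**a, "amount": 1000},
    ),
]
```

With the default penalty, an agent moves from "normal" to "monitored" after one violation, to "restricted" after two, and to "isolated" after three.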

Critically, governance operates independently of the governed components. You don't need to modify model weights or rewrite prompts to enforce policy. Governance becomes a service layer that wraps autonomous agents, enabling them to operate freely within defined boundaries while preventing harm. This separation allows rapid policy updates without retraining models, and ensures accountability even as system capabilities evolve.
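
The separation between governance and governed components can be sketched as a wrapper: the agent is any callable, and policies are swapped at runtime without touching it. `GovernedAgent` and the policy signature (return a reason string to block, or `None` to allow) are hypothetical choices for illustration.

```python
import threading
from typing import Callable, List, Optional

# A policy inspects a proposed output and returns a reason to block it, or None to allow it.
Policy = Callable[[str], Optional[str]]


class GovernedAgent:
    """Wraps any agent callable; policies can be replaced at runtime without changing the agent."""

    def __init__(self, agent: Callable[[str], str], policies: List[Policy]):
        self._agent = agent
        self._policies = list(policies)
        self._lock = threading.Lock()  # lets another thread update policies safely

    def update_policies(self, policies: List[Policy]) -> None:
        with self._lock:
            self._policies = list(policies)

    def __call__(self, prompt: str) -> str:
        output = self._agent(prompt)
        with self._lock:
            policies = list(self._policies)  # snapshot so an update mid-check is not observed
        for policy in policies:
            reason = policy(output)
            if reason is not None:
                return f"[blocked: {reason}]"
        return output
```

Updating policies is a single method call on the wrapper; the wrapped agent and its model are never modified.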

As agent systems handle increasingly critical tasks, from financial decisions to infrastructure control, they need sophisticated coordination mechanisms [15]. Static orchestration breaks down; systems must learn collaboration strategies [16] and adapt to heterogeneous agent capabilities in real time.

3. Provable Stability

How do you know when adding complexity improves a system versus destabilizing it? Traditional software development relies on testing, but AI systems introduce probabilistic behavior that makes exhaustive testing impossible. Mathematical guarantees about system stability as it evolves become essential.

AI-Native architectures can apply formal methods from control theory, such as Lyapunov stability analysis, to certify that changes improve rather than degrade system behavior [9]. Before adopting a new capability, routing strategy, or orchestration pattern, the system computes whether the change represents genuine improvement or merely adds complexity without benefit. The framework evaluates trade-offs between performance gains and architectural complexity, accepting changes only when they demonstrably enhance system behavior while maintaining stability [10][11].
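
A simplified, empirical version of this acceptance rule can be written as a gate on a Lyapunov-style "energy" that combines measured error with a complexity penalty. This is a heuristic sketch, not a formal stability certificate of the kind developed in [9]-[11]; the names, the weight `lam`, the minimum relative decrease, and the use of a single error rate and an integer complexity count are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    error_rate: float  # measured on a held-out evaluation set (assumed available)
    complexity: int    # assumed proxy, e.g. number of routes, agents, or rules


def energy(c: Candidate, lam: float) -> float:
    """Lyapunov-style energy: lower is better; complexity is penalized with weight lam."""
    return c.error_rate + lam * c.complexity


def accept_change(current: Candidate, proposed: Candidate,
                  lam: float = 0.001, min_decrease: float = 0.01) -> bool:
    """Accept only if energy drops by at least min_decrease (as a fraction of the current value)."""
    v_old, v_new = energy(current, lam), energy(proposed, lam)
    return v_new <= (1.0 - min_decrease) * v_old
```

Requiring a strict relative decrease, rather than any decrease, helps guard against accepting changes whose apparent gains are within measurement noise.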

Rather than preventing change, the focus turns to controlled evolution. Systems grow more sophisticated over time, but along trajectories proven to preserve essential properties like reliability, safety, and predictability. The infrastructure can reason formally about its own evolution, ensuring that today's optimization doesn't become tomorrow's catastrophic failure.
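
The idea of evolving only along trajectories that preserve stability mirrors the discrete-time Lyapunov decrease condition. As an illustrative formalization (an assumption about how the principle might be stated, not a result from the cited works), let $s_k$ be the system configuration after the $k$-th adaptation, $s^\star$ a desired operating point, and $V$ a candidate function:

```latex
V(s^\star) = 0, \qquad V(s) > 0 \ \ \forall s \neq s^\star, \qquad
V(s_{k+1}) - V(s_k) \le -\alpha\, V(s_k), \quad 0 < \alpha \le 1
```

Rearranging gives $V(s_{k+1}) \le (1-\alpha)\,V(s_k)$, so by induction $V(s_k) \le (1-\alpha)^k\, V(s_0)$. Every accepted adaptation drives $V$ geometrically toward zero, so, as measured by $V$, the system never moves away from its desired operating point.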

4. Comprehensive Observability

Traditional systems log what developers anticipated would be important. AI-Native systems must instrument everything, because emergent behaviors can arise from unexpected interactions between autonomous components.

Every request, routing decision, tool invocation, and governance action is recorded with a cryptographic signature for verification and retained for audit [12]. The system maintains a complete trace of how decisions were made, which components participated, what data they accessed, and what outcomes resulted. This creates a continuous stream of observability data that enables real-time monitoring, post-hoc analysis, and anomaly detection.
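
A minimal sketch of a tamper-evident audit trail in Python: each entry is authenticated with HMAC-SHA256 and chained to the previous entry's tag, so editing or reordering any record breaks verification. Note the substitution: HMAC is a symmetric message authentication code, not a public-key signature; a deployment that wants third parties to verify logs without sharing the secret would use asymmetric signatures such as Ed25519 instead. The `AuditLog` class, the event names, and the hard-coded key are hypothetical.

```python
import hashlib
import hmac
import json
import time
from typing import List

SECRET_KEY = b"replace-with-a-managed-secret"  # hypothetical; load from a secrets manager in practice


class AuditLog:
    """Append-only log: each entry is HMAC-tagged and chained to the previous entry's tag."""

    def __init__(self, key: bytes = SECRET_KEY):
        self._key = key
        self.entries: List[dict] = []

    def _sign(self, body: dict) -> str:
        # Canonical JSON (sorted keys) so the same body always produces the same tag.
        payload = json.dumps(body, sort_keys=True).encode()
        return hmac.new(self._key, payload, hashlib.sha256).hexdigest()

    def record(self, event: str, **details) -> dict:
        prev = self.entries[-1]["sig"] if self.entries else "genesis"
        body = {"ts": time.time(), "event": event, "details": details, "prev": prev}
        entry = {**body, "sig": self._sign(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = "genesis"
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "sig"}
            if body["prev"] != prev:
                return False  # chain broken: an entry was removed, inserted, or reordered
            if not hmac.compare_digest(entry["sig"], self._sign(body)):
                return False  # contents were altered after recording
            prev = entry["sig"]
        return True
```

Chaining detects edits, insertions, and deletions in the middle of the log, but not truncation of its tail; guarding against that requires anchoring the latest tag somewhere outside the log.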

Transparency serves multiple purposes. It enables debugging of complex multi-agent interactions. It provides accountability when systems make mistakes. It allows regulators and auditors to verify compliance. Most importantly, it enables the system itself to learn from its behavior, detecting patterns in successful operations and identifying failure modes before they cause harm.
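
As one simple example of detecting failure modes from this stream, the sketch below flags metric values, such as per-request latency, that fall more than a few standard deviations from a rolling mean. The rolling z-score is an assumption chosen for brevity; the class name, window size, warm-up length, and threshold are hypothetical defaults.

```python
import math
from collections import deque


class RollingAnomalyDetector:
    """Flags observations more than `threshold` standard deviations from a rolling mean."""

    def __init__(self, window: int = 50, threshold: float = 3.0, warmup: int = 10):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup  # observations needed before any value can be flagged

    def observe(self, x: float) -> bool:
        anomalous = False
        if len(self.values) >= self.warmup:
            mean = sum(self.values) / len(self.values)
            var = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = math.sqrt(var)
            anomalous = std > 0 and abs(x - mean) / std > self.threshold
        # Every value, including anomalies, enters the window; a production
        # detector might exclude flagged values to avoid skewing the baseline.
        self.values.append(x)
        return anomalous
```

A rolling window lets the baseline follow gradual drift while still flagging abrupt departures from recent behavior.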

When AI makes consequential decisions, "trust me" isn't enough. AI-Native architectures embed auditability from the ground up. Every routing choice, every policy enforcement, and every adaptation cycle becomes traceable [17].

Observability isn't surveillance; it's self-awareness. Systems that understand their own behavior can improve it.

What Comes Next

AI-Native architecture transforms software from artifact to organism. Systems that learn from outcomes, govern their own behavior, and evolve under mathematical constraints represent a new category of infrastructure.

The transition won't happen overnight. Legacy systems will persist for years, and traditional architectures will continue serving domains where predictability matters more than adaptability. But as AI capabilities expand, autonomy increases, and stakes rise, the gap between the infrastructure that's needed and the infrastructure that exists will widen. Organizations that embrace AI-Native principles now will build systems that scale with AI's evolution.

References

- [1] Vandeputte, F. et al., "Foundational Design Principles and Patterns for Building Robust and Adaptive GenAI-Native Systems," arXiv preprint arXiv:2508.15411, 2025.

- [2] Liu, S. et al., "Adaptive and Resource-efficient Agentic AI Systems for Mobile and Embedded Devices: A Survey," arXiv preprint arXiv:2510.00078, 2025.

- [3] Panda, P. et al., "Adaptive LLM Routing under Budget Constraints," arXiv preprint arXiv:2508.21141, 2025.

- [4] Richards, S.M. et al., "The Lyapunov Neural Network: Adaptive Stability Certification for Safe Learning of Dynamical Systems," arXiv preprint arXiv:1808.00924, 2018.

- [5] Guo, X. et al., "Towards Generalized Routing: Model and Agent Orchestration for Adaptive and Efficient Inference," arXiv preprint arXiv:2509.07571, 2025.

- [6] Krishnan, N. et al., "AI Agents: Evolution, Architecture, and Real-World Applications," arXiv preprint arXiv:2503.12687, 2025.

- [7] Gaurav, S. et al., "Governance-as-a-Service: A Multi-Agent Framework for AI System Compliance and Policy Enforcement," arXiv preprint arXiv:2508.18765, 2025.

- [8] Wang, C. L. et al., "MI9 - Agent Intelligence Protocol: Runtime Governance for Agentic AI Systems," arXiv preprint arXiv:2508.03858, 2025.

- [9] Yang, L. et al., "Lyapunov-stable Neural Control for State and Output Feedback: A Novel Formulation," arXiv preprint arXiv:2404.07956, 2024.

- [10] Nar, K. et al., "Residual Networks: Lyapunov Stability and Convex Decomposition," arXiv preprint arXiv:1803.08203, 2018.

- [11] Dai, H. et al., "Lyapunov-stable neural-network control," arXiv preprint arXiv:2109.14152, 2021.

- [12] Raza, S. et al., "TRiSM for Agentic AI: A Review of Trust, Risk, and Security Management in LLM-based Agentic Multi-Agent Systems," arXiv preprint arXiv:2506.04133, 2025.

- [13] Varangot-Reille, C. et al., "Doing More with Less – Implementing Routing Strategies in Large Language Model-Based Systems: An Extended Survey," arXiv preprint arXiv:2502.00409, 2025.

- [14] Wang, Y. et al., "Adaptive Orchestration for Large-Scale Inference on Heterogeneous Accelerator Systems: Balancing Cost, Performance, and Resilience," arXiv preprint arXiv:2503.20074, 2025.

- [15] Zhang, X. et al., "Orchestrating Human-AI Teams: The Manager Agent as a Unifying Research Challenge," arXiv preprint arXiv:2510.02557, 2025.

- [16] Zhang, Z. et al., "AgentRouter: A Knowledge-Graph-Guided LLM Router for Collaborative Multi-Agent Question Answering," arXiv preprint arXiv:2510.05445, 2025.

- [17] Tallam, K., "From Autonomous Agents to Integrated Systems, A New Paradigm: Orchestrated Distributed Intelligence," arXiv preprint arXiv:2503.13754, 2025.
