"The human brain provides a compelling blueprint for achieving the effective computational depth that contemporary artificial models lack."

— Hierarchical Reasoning Model Paper

Many current AI systems rely on Chain-of-Thought (CoT) reasoning, which processes information step-by-step in a flat, sequential manner. While effective for simple problems, CoT suffers from brittle task decomposition, requires massive amounts of training data, and hits fundamental limitations when tackling complex, multi-step reasoning problems.

Recognizing these limitations, researchers are exploring hierarchical reasoning architectures that move beyond CoT's linear constraints. These approaches draw inspiration from cognitive science and neuroscience, where reasoning operates across multiple levels of abstraction simultaneously. Rather than processing each step sequentially, hierarchical systems coordinate between high-level strategic planning and low-level detailed execution, similar to how humans naturally decompose complex problems.

Hierarchical Reasoning Model

Building on this foundation, researchers at Sapient Intelligence have developed the Hierarchical Reasoning Model (HRM), which mirrors the brain's hierarchical, multi-timescale processing architecture [1]. Instead of following CoT's linear approach, HRM operates like a multi-tier fountain in which information flows in orchestrated patterns across different computational timescales.

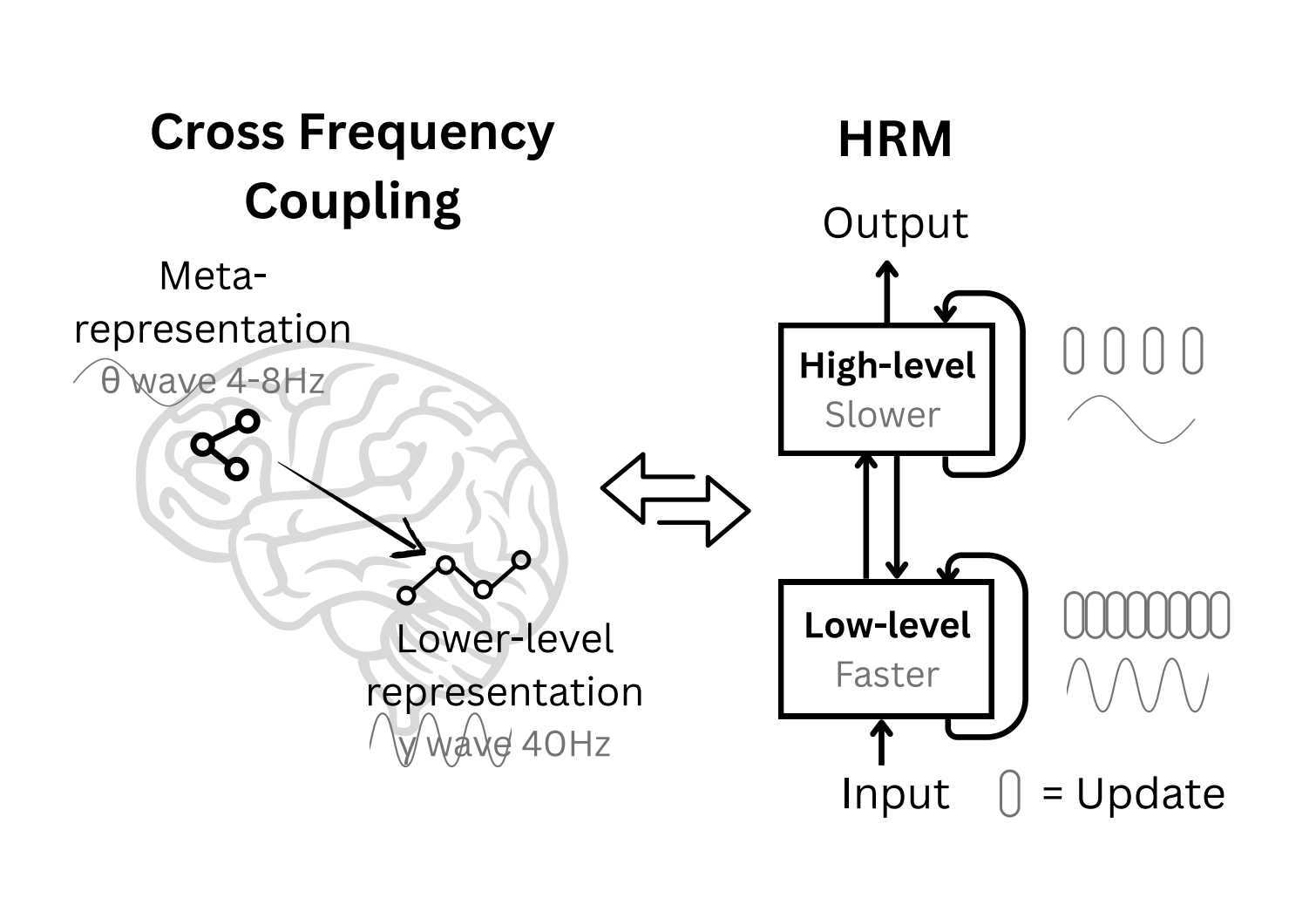

In this multi-tier fountain analogy, water (information) circulates from ground level (detailed processing) to the upper levels (abstract reasoning), then flows back down and is recirculated. More specifically, the system features two interdependent recurrent modules: a fast low-level worker that manages rapid, detailed computations, and a slower high-level planner that handles abstract reasoning and strategic decomposition. Information cycles between these levels through iterative refinement loops. The planner provides strategic guidance, and the worker returns detailed computational results that inform the next higher-level update.

This brain-inspired design creates what the researchers call hierarchical convergence. Within each computational cycle, the worker module settles to a local equilibrium. The planner module then updates its guidance, which resets the worker and starts a new phase of convergence. As a result, the system performs a sequence of distinct, stable computations rather than converging prematurely, as traditional recurrent networks tend to do.
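The two nested loops can be illustrated with a toy, scalar version. This is only a minimal sketch under assumed update rules: `worker_step`, `planner_step`, and every constant below are hypothetical and are not taken from the HRM paper. It shows how the size of the worker's updates shrinks within a cycle and jumps again once the planner moves.

```python
import math

def worker_step(z_low, z_high, x):
    # Fast module (toy): contractive update that settles toward tanh(z_high + x).
    return 0.5 * z_low + 0.5 * math.tanh(z_high + x)

def planner_step(z_high, z_low):
    # Slow module (toy): updated once per cycle from the worker's settled state.
    return 0.5 * z_high + 0.5 * z_low

def run_cycles(x, n_cycles=3, steps_per_cycle=8):
    """Return, per cycle, the size of each worker update (its residual)."""
    z_low, z_high = 0.0, 0.0
    residuals = []
    for _ in range(n_cycles):
        cycle = []
        for _ in range(steps_per_cycle):
            new_low = worker_step(z_low, z_high, x)
            cycle.append(abs(new_low - z_low))
            z_low = new_low
        residuals.append(cycle)
        # New guidance shifts the worker's target, so its next update is large again.
        z_high = planner_step(z_high, z_low)
    return residuals

if __name__ == "__main__":
    for i, cycle in enumerate(run_cycles(x=1.0), start=1):
        print(f"cycle {i}: first={cycle[0]:.4f} last={cycle[-1]:.6f}")
```

In this toy, the residual shrinks steadily inside each cycle and rises again at the start of the next one, which is the qualitative pattern the term hierarchical convergence describes.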

Remarkable Efficiency and Performance

This hierarchical architecture delivers strong results with modest resources. With only 27 million parameters and just 1,000 training samples, HRM achieves strong performance on complex reasoning tasks [1]. To put this in perspective, most advanced large language models rely on billions or even trillions of parameters and vast training datasets to achieve comparable results.

Benchmark Strengths

HRM performs well on challenging tasks, including complex Sudoku puzzles and optimal pathfinding in large mazes. Most notably, it outperforms much larger models with far longer context windows on the Abstraction and Reasoning Corpus (ARC) [1], a key benchmark for measuring artificial general intelligence capabilities.

Current Limitations

Humans achieve 76.2% accuracy on ARC training tasks [4], while HRM achieves approximately 40% on ARC benchmarks [1]. The two figures come from different task sets, so the comparison is indicative rather than exact. Though still well below human performance, HRM's score is a meaningful advance over the roughly 31% that other AI approaches typically manage, and it shows progress in closing the reasoning gap.

These performance gains stem directly from HRM's hierarchical architecture, which efficiently specializes each computational tier for its optimal processing timescale rather than requiring massive networks to handle both abstract and concrete reasoning simultaneously.

The Science Behind Multi-Timescale Intelligence

Understanding why hierarchical reasoning works requires examining how the brain processes information across different temporal scales. Research into neural language models reveals parallels with brain organization: studies that map the processing timescales of individual units show that units with long timescales are the ones tracking long-range dependencies [5]. This mirrors how the human brain processes language and reasoning across different temporal hierarchies.

Source: Hierarchical Reasoning Model Paper

HRM directly translates this biological architecture into computational form. The high-level planner module operates like the brain's slow theta rhythms, which are associated with working memory and strategic planning, maintaining abstract problem representations and overall reasoning strategies. Meanwhile, the low-level worker module mirrors fast gamma processing, which is linked to focused attention and detailed computation, rapidly executing specific computational steps within each planning cycle.

The bidirectional communication between modules, shown by arrows in the diagram, closes the loop. The planner provides strategic guidance and problem decomposition, while the worker delivers detailed results that flow back up to inform strategic updates. Together they form an efficient reasoning loop that mirrors human cognitive processing across multiple timescales.

Foundational Evidence for Hierarchical Approaches

The broader success of hierarchical processing has been emerging across multiple research directions, providing strong validation for approaches like HRM. Two compelling examples from recent work demonstrate how hierarchical thinking was already proving its value in advancing AI reasoning capabilities, setting the theoretical and practical foundation for breakthrough systems like HRM.

Multi-Step Reward Models

Research on hierarchical multi-step reward models shows that evaluating reasoning at both fine-grained (individual steps) and coarse-grained (strategic decisions) scales significantly improves coherence [6]. This dual-level evaluation parallels the division of labor in the Hierarchical Reasoning Model's planner-worker design.

This approach excels particularly when flawed reasoning steps are later corrected through self-reflection, much like how humans review their work at both detailed and strategic levels to catch errors that single-level analysis would miss.
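The idea of scoring reasoning at two scales can be sketched in a few lines. This is a rough illustration, not the method of [6]: it assumes that the coarse scale is a window of two consecutive steps, and `toy_score` with its `[error]` and `[fix]` markers is a hypothetical stand-in for a learned reward model.

```python
def toy_score(window):
    """Hypothetical stand-in for a learned reward model (returns 0.0 or 1.0)."""
    text = " ".join(window)
    # Penalize a window whose most recent marker is an uncorrected error.
    return 0.0 if text.rfind("[error]") > text.rfind("[fix]") else 1.0

def two_scale_scores(steps, score_fn=toy_score):
    """Score every single step (fine) and every pair of consecutive steps (coarse)."""
    fine = [score_fn([s]) for s in steps]
    coarse = [score_fn(steps[i:i + 2]) for i in range(len(steps) - 1)]
    return fine, coarse

steps = [
    "2 + 3 = 6 [error]",
    "wait, 2 + 3 = 5 [fix]",
    "so (2 + 3) * 2 = 10",
]

if __name__ == "__main__":
    fine, coarse = two_scale_scores(steps)
    print("fine:", fine)
    print("coarse:", coarse)
```

Scored alone, the first step is penalized. Scored together with the correction that follows it, the same step sits inside a window that ends in a valid state, so a self-corrected chain is not rejected outright.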

ReasonFlux Systems

ReasonFlux systems that use hierarchical thought templates to optimize reasoning search spaces have shown remarkable success, even outperforming powerful models like OpenAI's o1-preview [7]. These systems create libraries of high-level reasoning patterns that generalize across similar problem types.

This success demonstrates the practical value of hierarchical approaches, where structured template libraries containing hundreds of high-level thought templates provide strategic guidance similar to how HRM's planner module develops reasoning strategies for different problem categories.

Together, these advances show that hierarchical processing is a promising frontier for AI research with a growing record of results. The field is moving beyond biologically inspired theory toward practical gains in artificial reasoning, and this work helped pave the way for systems like HRM.

Implementation and Technical Innovation

Beyond its architectural insights, HRM introduces several technical innovations that make hierarchical reasoning practically feasible. The system employs a one-step gradient approximation that avoids the memory-intensive backpropagation through time (BPTT) typically required for recurrent networks. This reduces memory complexity from O(T) to O(1), where T is the number of recurrent time steps, while maintaining training effectiveness.
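A scalar toy recurrence gives a feel for what a one-step gradient keeps and what it drops. This is a sketch under stated assumptions, not the paper's implementation: the update `f`, the constant `A`, and the function names are hypothetical. The one-step estimate differentiates only the final update, so nothing but the final state must be kept, whereas reverse-mode BPTT would have to store all T intermediate states. The full unrolled derivative is computed here by forward accumulation purely as a reference value.

```python
import math

A = 0.2  # hypothetical recurrent weight, kept below 1 so the update is contractive

def f(z, w, x):
    # One recurrent update of the toy model.
    return math.tanh(w * x + A * z)

def solve_fixed_point(w, x, steps=50):
    z = 0.0
    for _ in range(steps):
        z = f(z, w, x)
    return z

def one_step_grad(w, x, steps=50):
    # Iterate without tracking derivatives, then differentiate the last step only,
    # treating the incoming state as a constant.
    z_star = solve_fixed_point(w, x, steps)
    slope = 1.0 - f(z_star, w, x) ** 2  # derivative of tanh at the final step
    return x * slope

def unrolled_grad(w, x, steps=50):
    # Reference: derivative of the final state through every step of the recurrence.
    z, g = 0.0, 0.0
    for _ in range(steps):
        z = f(z, w, x)
        g = (1.0 - z ** 2) * (x + A * g)
    return g

if __name__ == "__main__":
    print("one-step:", one_step_grad(0.5, 1.0))
    print("unrolled:", unrolled_grad(0.5, 1.0))
```

In this toy the two values share a sign and differ by a bounded factor, because the dropped term depends on how strongly the state feeds back into itself (`A` here). How well the approximation holds in a real network depends on that feedback strength.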

The model also incorporates adaptive computation time (ACT), which lets it decide dynamically how much processing each problem requires. It spends minimal resources on simple tasks and allocates extensive computation to complex reasoning challenges. This brain-inspired "thinking, fast and slow" mechanism enables efficient resource utilization and better performance scaling.
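The control flow of adaptive computation can be sketched with a toy problem in which each "segment" is one refinement step. Everything here is an illustrative assumption: `refine` is a Newton step toward a square root, and `toy_q_head` is a hand-written heuristic standing in for whatever learned halting signal a real model would use.

```python
def refine(z, x):
    # One reasoning "segment" (toy): a Newton step toward sqrt(x), for x > 0.
    return 0.5 * (z + x / z)

def toy_q_head(z, x, tol=1e-6):
    # Hypothetical stand-in for a learned halting head: returns (q_halt, q_continue).
    rel_error = abs(z * z - x) / x
    return -rel_error, -tol

def solve_adaptively(x, max_segments=32):
    """Run segments until the halting score wins or the budget is exhausted."""
    z, used = 1.0, 0
    while used < max_segments:
        z = refine(z, x)
        used += 1
        q_halt, q_continue = toy_q_head(z, x)
        if q_halt > q_continue:
            break
    return z, used

if __name__ == "__main__":
    for x in (4.0, 1_000_000.0):
        z, used = solve_adaptively(x)
        print(f"x={x}: estimate={z:.6f} after {used} segments")
```

The easy input halts after fewer segments than the hard one, and `max_segments` caps the cost when the halting signal never fires.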

During training, HRM uses deep supervision at multiple computational segments, providing frequent feedback that helps stabilize the learning process and improves convergence. These technical advances work together to make hierarchical reasoning both computationally efficient and practically trainable at scale.
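A scalar toy makes the training pattern concrete: compute a loss after every segment, update the weights immediately, and carry the state forward as a plain value so that no gradient reaches earlier segments. This is a sketch under assumptions, with a hypothetical one-weight model, constant `A`, and learning rate; it is not the paper's training code.

```python
import math

A = 0.2  # hypothetical recurrent weight

def segment(z_in, w, x):
    # One computational segment of the toy model.
    return math.tanh(w * x + A * z_in)

def train_with_deep_supervision(x, y, n_segments=100, lr=0.5):
    """Apply a loss and a weight update after every segment."""
    w, z = 0.0, 0.0
    losses = []
    for _ in range(n_segments):
        z_out = segment(z, w, x)
        losses.append((z_out - y) ** 2)
        # Gradient of this segment's loss with respect to w, treating the carried
        # state z as a constant (no gradient flows into earlier segments).
        grad_w = 2.0 * (z_out - y) * (1.0 - z_out ** 2) * x
        w -= lr * grad_w
        z = z_out  # carry the state forward as a plain value
    return w, losses

if __name__ == "__main__":
    w, losses = train_with_deep_supervision(x=1.0, y=0.8)
    print(f"first loss={losses[0]:.4f} last loss={losses[-1]:.2e}")
```

Because every segment produces its own learning signal, feedback arrives once per segment rather than once per full forward pass.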

Implications for Future AI Development

The success of brain-inspired hierarchical reasoning models like HRM represents more than improved benchmark scores. It points to a shift in how we develop artificial intelligence systems. These results are consistent with the view that intelligence is best measured by the efficiency of skill acquisition on unknown tasks [8], rather than by the ability to memorize vast amounts of training data.

This paradigm shift has several important implications for AI development. First, it suggests that smaller, more efficiently designed models can compete with massive parameter-heavy systems when architected correctly around hierarchical principles. Second, it validates the value of drawing inspiration from biological intelligence rather than pursuing purely computational scaling approaches.

HRM's performance on challenging reasoning benchmarks suggests meaningful progress toward more human-like cognitive capabilities, though significant work remains in fully bridging the human-AI reasoning gap. The architecture's ability to achieve strong results with minimal training data also points toward more sustainable and accessible AI development.

By combining the brain's multi-timescale processing principles with modern computational capabilities, systems like HRM represent an emerging convergence of biological inspiration and computational innovation. This fountain-like flow of information across hierarchical processing levels suggests promising trends for artificial intelligence development, potentially advancing toward more capable reasoning systems that incorporate human-like cognitive patterns while preserving the computational advantages of digital architectures.

References

[1] Wang, Guan et al., "Hierarchical Reasoning Model," arXiv, 2025.

[2] Rudelt, Lucas et al., "Signatures of hierarchical temporal processing in the mouse visual system," arXiv, 2023.

[3] Goldstein, Ariel et al., "The Temporal Structure of Language Processing in the Human Brain Corresponds to The Layered Hierarchy of Deep Language Models," arXiv, 2023.

[4] LeGris, Solim et al., "H-ARC: A Robust Estimate of Human Performance on the Abstraction and Reasoning Corpus Benchmark," arXiv, 2024.

[5] Chien, Hsiang-Yun et al., "Mapping the Timescale Organization of Neural Language Models," arXiv, 2020.

[6] Wang, Teng et al., "Towards Hierarchical Multi-Step Reward Models for Enhanced Reasoning in Large Language Models," arXiv, 2025.

[7] Yang, Ling et al., "ReasonFlux: Hierarchical LLM Reasoning via Scaling Thought Templates," arXiv, 2025.

[8] Chollet, François, "On the Measure of Intelligence," arXiv, 2019.
