Spiking Neural Networks for Energy-Efficient AI

What if AI could think as efficiently as your brain, using no more power than a reading lamp while processing complex information? While today's artificial intelligence systems consume massive amounts of electricity running continuously, an alternative approach called spiking neural networks (SNNs) operates more like biological brains, activating only when needed and processing information through precise timing patterns. These networks can achieve dramatic energy savings while maintaining competitive performance. Recent work reports that they can scale to billions of parameters, process million-token sequences with large speedups, and require significantly less training data than conventional models.

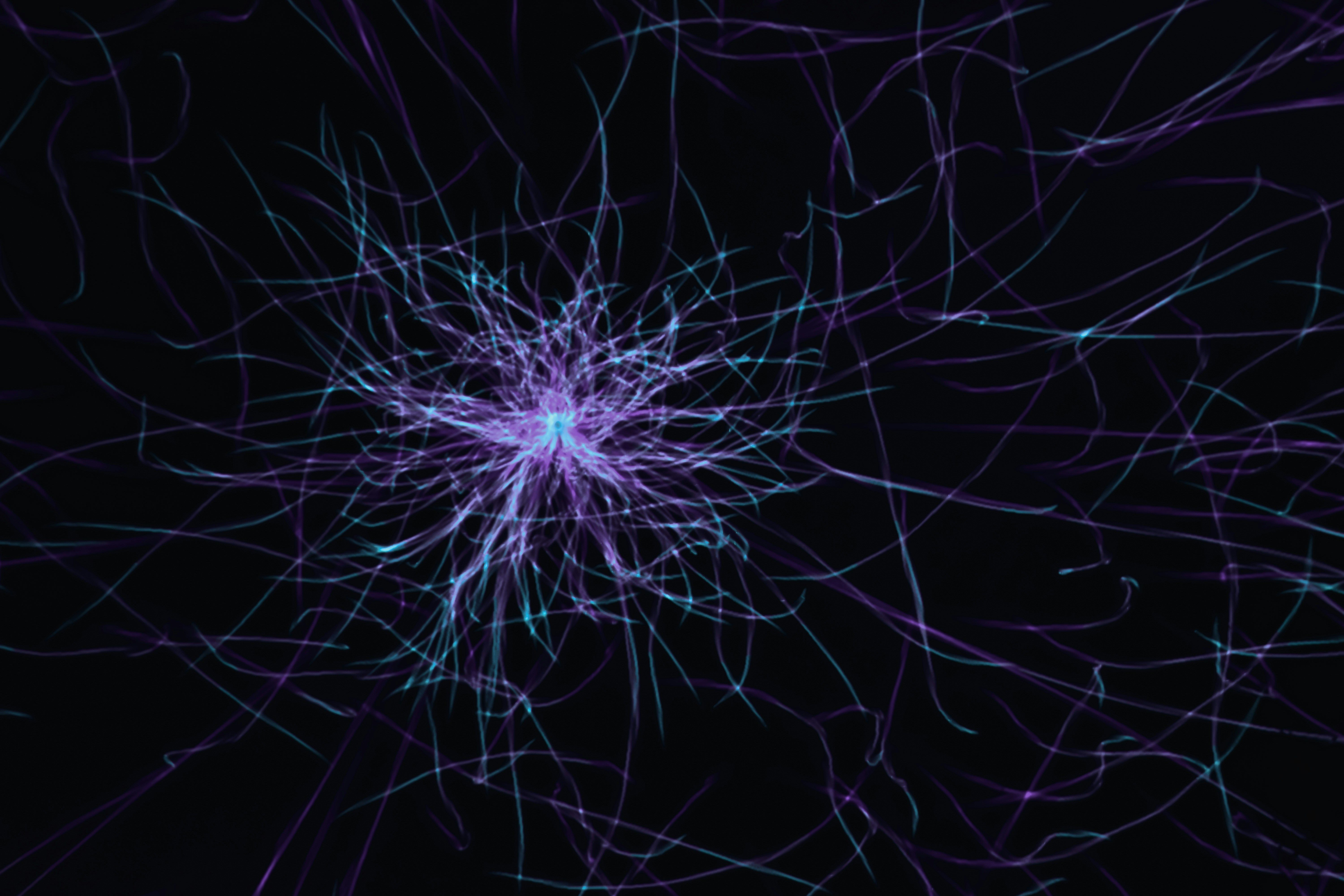

When a person reads a word like "fire", specific neurons activate in millisecond bursts. Some handle visual recognition. Others process meaning. Still others create emotional associations. Yet the vast majority of the brain's roughly 86 billion neurons remain quiet, conserving energy. Only the precise neural circuits needed for that moment spring to life. Such selective activation creates sparse, highly efficient patterns of activity. Spiking neural networks mimic this biological efficiency in artificial intelligence. They activate computational units only when specific conditions are met rather than running continuously like traditional AI systems.

Architecture That Breaks Traditional Rules

Spiking neural networks represent a fundamental shift from conventional artificial neural networks by processing information through discrete spike events rather than continuous activation values. Traditional networks resemble a factory assembly line where every station processes every item that passes through. In contrast, spiking networks function more like a city's electrical grid where individual components only activate and consume power when needed.

This selective activation creates inherent sparsity: typically only 5-30% of neurons are active at any given moment. The sparse pattern differs markedly from dense networks, where all neurons process every input. The approach resembles the difference between streetlights that illuminate only when motion is detected and those that remain constantly lit.
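The effect of sparsity on the amount of work done can be sketched in a few lines of Python. This is an illustrative toy, not a description of any particular SNN framework: the layer sizes, the 10% activity level, and the function names `dense_layer` and `event_driven_layer` are all assumptions made for the example.

```python
import random

def dense_layer(inputs, weights):
    """Dense update: every input takes part in a multiply-accumulate."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, x in enumerate(inputs):
        for j in range(n_out):
            out[j] += x * weights[i][j]
            ops += 1
    return out, ops

def event_driven_layer(spikes, weights):
    """Event-driven update: only inputs that spiked are visited, and each
    contributes a plain addition of its weights (no multiplication)."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, spiked in enumerate(spikes):
        if not spiked:
            continue  # silent neurons cost nothing in this model
        for j in range(n_out):
            out[j] += weights[i][j]
            ops += 1
    return out, ops

random.seed(0)
n_in, n_out = 100, 50  # hypothetical layer size
weights = [[random.uniform(-1, 1) for _ in range(n_out)] for _ in range(n_in)]

# Assumed activity level: 10 of 100 input neurons spike on this timestep.
active = set(random.sample(range(n_in), 10))
spikes = [1 if i in active else 0 for i in range(n_in)]

dense_out, dense_ops = dense_layer(spikes, weights)
sparse_out, sparse_ops = event_driven_layer(spikes, weights)
print(dense_ops, sparse_ops)
```

Both functions produce the same output for binary inputs, but the event-driven version performs work proportional to the number of spikes rather than the number of neurons.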

The core innovation lies in temporal dynamics, that is, in how these systems process information across time. While conventional networks process information in a single forward pass, spiking networks integrate information over time through membrane potential dynamics. Each neuron functions like a rain barrel: it accumulates incoming spike signals (raindrops) and fires (overflows) only when a threshold is reached. This membrane potential mechanism gives the network a built-in form of temporal processing that can capture time-dependent patterns without the additional recurrent structures that traditional networks typically need to handle time-based information.
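The rain barrel picture corresponds closely to an integrate-and-fire neuron. The following is a minimal sketch, assuming a discrete-time model with a hard reset; the `leak` factor (a barrel that slowly drains) is an addition beyond the analogy in the text, and setting `leak=1.0` recovers pure accumulation. The function name and default values are hypothetical.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete timesteps.

    input_current: list of input values, one per timestep.
    Returns (spikes, trace): the 0/1 spike train and the membrane
    potential recorded after each step.
    """
    v = 0.0
    spikes, trace = [], []
    for current in input_current:
        v = leak * v + current      # decay the old potential, add new input
        if v >= threshold:          # the barrel overflows
            spikes.append(1)
            v = v_reset             # empty the barrel after firing
        else:
            spikes.append(0)
        trace.append(v)
    return spikes, trace

# A constant weak input: the neuron stays silent until enough has accumulated.
spikes, trace = simulate_lif([0.3] * 10)
print(spikes)  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

The output is a spike train rather than a single number, and the neuron's state carries over between timesteps, which is where the temporal processing described above comes from.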

These architectural properties translate into efficiency: because only the neural circuits needed for a specific computation become active, energy consumption drops sharply while processing capability is maintained. Recent work has explored various optimization strategies for spiking networks. These include attention mechanisms designed for the unique properties of spike-based computation and training methods that exploit the temporal dynamics of neuronal firing patterns.

Neuromorphic Computing and Nature's Energy Solution

The significance of spiking neural networks stems not merely from their structure but from how they use energy. These systems mirror the brain's targeted activation patterns and deliver efficiency improvements that could reshape AI computing infrastructure.

The efficiency advantage becomes more pronounced when spiking neural networks operate on specialized neuromorphic chips. Such hardware mimics biological neuron function directly. Traditional processors continuously cycle through calculations, whereas neuromorphic chips activate only when individual neurons actually spike. This resembles a neighborhood where houses draw power only when residents are home. Companies such as Intel, with its Loihi chips, and IBM, with TrueNorth, have reported that this event-driven hardware can run spiking neural networks on a small fraction of the energy a conventional GPU would use, in some cases by several orders of magnitude. That moves such systems closer to the brain's roughly 20-watt power budget.

An analytical study estimates that spiking neural networks can be 6 to 8 times more energy efficient than comparable conventional networks [2]. Some implementations report up to 280 times lower energy consumption on neuromorphic chips, for example in object detection [3]. Where traditional AI consumes energy continuously, like a city with all lights blazing, neuromorphic computing functions like that selective neighborhood where only occupied houses use electricity. Event-driven processing, including event-driven learning algorithms, substantially reduces idle power consumption [4].

The selective activation principle extends throughout the entire system architecture. Just as a neighborhood conserves energy by lighting only active areas, neuromorphic systems process information only when and where needed. Such selective processing creates cascading efficiency gains that multiply across the entire computing infrastructure.
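The efficiency argument in this section can be made concrete with a back-of-the-envelope calculation. The sketch below is a deliberately crude model and not the method of any cited study: it counts only arithmetic, ignores memory access and static power, and uses per-operation energies (4.6 pJ per multiply-accumulate, 0.9 pJ per accumulate) of the kind often quoted for 45 nm CMOS, which should be treated as assumptions. The function name `estimate_energy_pj` is hypothetical.

```python
def estimate_energy_pj(n_synapses, spike_rate, timesteps,
                       e_mac_pj=4.6, e_ac_pj=0.9):
    """Rough per-inference arithmetic energy for one layer, in picojoules.

    Dense network: every synapse performs one multiply-accumulate (MAC).
    Spiking network: a synapse performs one accumulate (AC) per input
    spike, so the cost scales with spike_rate * timesteps.
    All energy constants are assumed illustrative values.
    """
    ann_energy = n_synapses * e_mac_pj
    snn_energy = n_synapses * spike_rate * timesteps * e_ac_pj
    return ann_energy, snn_energy, ann_energy / snn_energy

# Assumed operating point: 10% of inputs spike per timestep, 4 timesteps.
ann, snn, ratio = estimate_energy_pj(
    n_synapses=1_000_000, spike_rate=0.1, timesteps=4
)
print(f"dense: {ann:.0f} pJ, spiking: {snn:.0f} pJ, ratio: {ratio:.1f}x")

# The advantage is not automatic: with dense firing over many timesteps
# the spiking layer costs more than the dense one in this model.
_, _, busy_ratio = estimate_energy_pj(1_000_000, spike_rate=1.0, timesteps=8)
print(f"ratio at full activity over 8 timesteps: {busy_ratio:.2f}x")
```

The second call illustrates why sparsity matters: in this model the savings come entirely from low spike rates and short simulation windows, so the resulting ratio depends heavily on the operating point chosen.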

Advanced Training and Biological Principles

⚡ Training Innovation

Modern spiking networks use continual pre-training with significantly less training data while achieving comparable performance to mainstream models.

Training spiking neural networks presents unique challenges because traditional learning methods rely on smooth, continuous signals that can be adjusted through mathematical gradients. Spikes, however, are binary events: neurons either fire or they don't. Such binary behavior creates sharp, discontinuous signals whose gradient is zero almost everywhere, so standard training algorithms can't handle them directly. To solve this gradient problem, researchers developed workarounds called surrogate gradient functions. These substitute a smooth approximation of the spike function during the learning phase while preserving the sharp spiking behavior during actual operation [5].
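A minimal sketch of the idea, using a single neuron with one weight: the forward pass uses the hard threshold, while the backward pass pretends the threshold had a smooth slope. The surrogate chosen here (shaped like the derivative of a fast sigmoid) is one common option among several; the function names, the `slope` value, and the toy training loop are assumptions for illustration.

```python
def spike_forward(v, threshold=1.0):
    """Forward pass: a hard step. Its true derivative is zero almost everywhere."""
    return 1.0 if v >= threshold else 0.0

def surrogate_grad(v, threshold=1.0, slope=5.0):
    """Backward pass: a smooth stand-in for the step's derivative.
    It peaks at the threshold and decays as v moves away from it."""
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2

def train_single_weight(w=0.2, x=1.0, target=1.0, lr=1.0, max_steps=100):
    """Adjust one weight until the neuron emits the target spike.
    Loss is 0.5 * (spike - target)**2 with membrane potential v = w * x."""
    for step in range(max_steps):
        v = w * x
        s = spike_forward(v)
        if s == target:
            return w, step
        # Chain rule, with the surrogate replacing d(spike)/dv:
        # dL/dw = (s - target) * d(spike)/dv * dv/dw
        grad = (s - target) * surrogate_grad(v) * x
        w -= lr * grad
    return w, max_steps

w_final, steps = train_single_weight()
print(f"neuron fires after {steps} updates, w = {w_final:.3f}")
```

With the true derivative of the step function, `grad` would be zero on every iteration and the weight would never move; the surrogate supplies a usable learning signal while the forward pass still emits genuine binary spikes.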

Even more fascinating is how these networks can learn through biological principles. One such principle is Spike-Timing-Dependent Plasticity (STDP), a learning mechanism discovered in real neurons where the precise timing between spikes determines whether connections strengthen or weaken. Think of a conversation: if one person consistently speaks right after another, their conversational connection strengthens. Similarly, if neuron A consistently fires just before neuron B, their connection grows stronger; if A tends to fire just after B, the connection weakens. STDP creates local, event-driven learning that happens right at the connections between neurons and doesn't require global coordination across the entire network. Variants of the rule continue to be proposed, including a consciousness-driven formulation [6].
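The timing rule can be written down compactly. The sketch below assumes the common pair-based form of STDP with exponential windows; the amplitudes, time constants, weight bounds, and function names are illustrative choices and are not taken from the cited work.

```python
import math

def stdp_delta_w(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair.

    dt = t_post - t_pre in milliseconds.
    dt > 0 (pre fires before post): strengthen the connection.
    dt < 0 (pre fires after post): weaken it.
    The effect fades exponentially as the spikes move apart in time.
    """
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0

def apply_stdp(w, pre_times, post_times, w_min=0.0, w_max=1.0):
    """Sum the contribution of every pre/post pair, then clip the weight.
    Only this synapse's own spike times are needed: the rule is local."""
    for t_pre in pre_times:
        for t_post in post_times:
            w += stdp_delta_w(t_post - t_pre)
    return min(max(w, w_min), w_max)

# Neuron A fires 5 ms before neuron B, three times in a row.
w_up = apply_stdp(0.5, pre_times=[10, 50, 90], post_times=[15, 55, 95])
# Reversed order: A fires 5 ms after B.
w_down = apply_stdp(0.5, pre_times=[15, 55, 95], post_times=[10, 50, 90])
print(w_up > 0.5, w_down < 0.5)  # True True
```

Nothing in `apply_stdp` refers to a global loss or to other synapses, which is what makes this kind of learning attractive for event-driven hardware.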

Research on temporal information dynamics has uncovered a phenomenon called Temporal Information Concentration, in which the network gradually learns to process the most important information in the earliest moments of computation [7]. The phenomenon mirrors a fundamental mechanism in biological brains. When a person hears a sudden loud noise, the brain immediately processes whether it's dangerous before analyzing whether it's a car backfiring or thunder. During training, spiking networks naturally develop a similar priority system. They learn to concentrate critical decision-making information in the first few timesteps of processing while relegating detailed analysis to later timesteps. Such temporal organization makes the networks both more efficient and more robust. They can make quick decisions when needed while still maintaining the capacity for deeper analysis.

Performance and Future Implications

🏆 Key Achievement

State-of-the-art implementations achieve >100× speedup in Time to First Token for multi-million token sequences while maintaining competitive language modeling performance.

Advanced spiking neural networks can now process sequences of millions of tokens with dramatic speed improvements. The SpikingBrain report describes spiking models that scale to billions of parameters and achieve over 100× speedup in Time to First Token on million-token sequences, while requiring only 2% of typical training data [1]. The integration of linear-complexity attention, sparse activation patterns, and biological learning mechanisms positions these models as stepping stones toward next-generation neuromorphic chips, a direction also pursued by transformer-inspired spiking architectures [8].

The future promises even tighter integration between spiking neural networks and neuromorphic hardware. As SNNs become more sophisticated, they're driving demand for specialized chips that can fully exploit their event-driven nature. Meanwhile, advances in neuromorphic chip design are enabling larger, more complex spiking networks that were previously impossible to implement efficiently. Such symbiotic development could lead to AI systems that combine the processing power of today's large language models with the energy efficiency of biological brains. The integration potentially enables powerful AI to run on smartphones, embedded devices, and edge computing systems where power constraints currently make advanced AI impractical.

The spiking neural network approach suggests a future where artificial intelligence operates more like biological brains. Such systems efficiently process information through sparse, event-driven computation rather than continuous energy-intensive operations.

References

- [1] Y. Pan, Y. Feng, J. Zhuang, S. Ding, Z. Liu, B. Sun, Y. Chou, H. Xu, X. Qiu, A. Deng, A. Hu, P. Zhou, M. Yao, J. Wu, J. Yang, G. Sun, B. Xu, and G. Li, "SpikingBrain Technical Report: Spiking Brain-inspired Large Models," arXiv preprint arXiv:2509.05276, 2025.

- [2] E. Lemaire, L. Cordone, A. Castagnetti, P.-E. Novac, J. Courtois, and B. Miramond, "An Analytical Estimation of Spiking Neural Networks Energy Efficiency," arXiv preprint arXiv:2210.13107, 2022.

- [3] S. Kim, S. Park, B. Na, and S. Yoon, "Spiking-YOLO: Spiking Neural Network for Energy-Efficient Object Detection," arXiv preprint arXiv:1903.06530, 2019.

- [4] W. Wei, M. Zhang, J. Zhang, A. Belatreche, J. Wu, Z. Xu, X. Qiu, H. Chen, Y. Yang, and H. Li, "Event-Driven Learning for Spiking Neural Networks," arXiv preprint arXiv:2403.00270, 2024.

- [5] E. O. Neftci, H. Mostafa, and F. Zenke, "Surrogate Gradient Learning in Spiking Neural Networks: Bringing the Power of Gradient-Based Optimization to Spiking Neural Networks," IEEE Signal Processing Magazine, vol. 36, no. 6, pp. 51-63, 2019.

- [6] S. Yadav, S. Chaudhary, and R. Kumar, "Consciousness Driven Spike Timing Dependent Plasticity," arXiv preprint arXiv:2405.04546, 2024.

- [7] Y. Kim, Y. Li, H. Park, Y. Venkatesha, A. Hambitzer, and P. Panda, "Exploring Temporal Information Dynamics in Spiking Neural Networks," arXiv preprint arXiv:2211.14406, 2022.

- [8] M. Yao, J. Hu, T. Hu, Y. Xu, Z. Zhou, Y. Tian, B. Xu, and G. Li, "Spike-driven Transformer V2: Meta Spiking Neural Network Architecture Inspiring the Design of Next-generation Neuromorphic Chips," arXiv preprint arXiv:2404.03663, 2024.
