The AI That Rewrites Itself

MIT's Revolutionary SEAL Framework Teaches Language Models to Self-Adapt

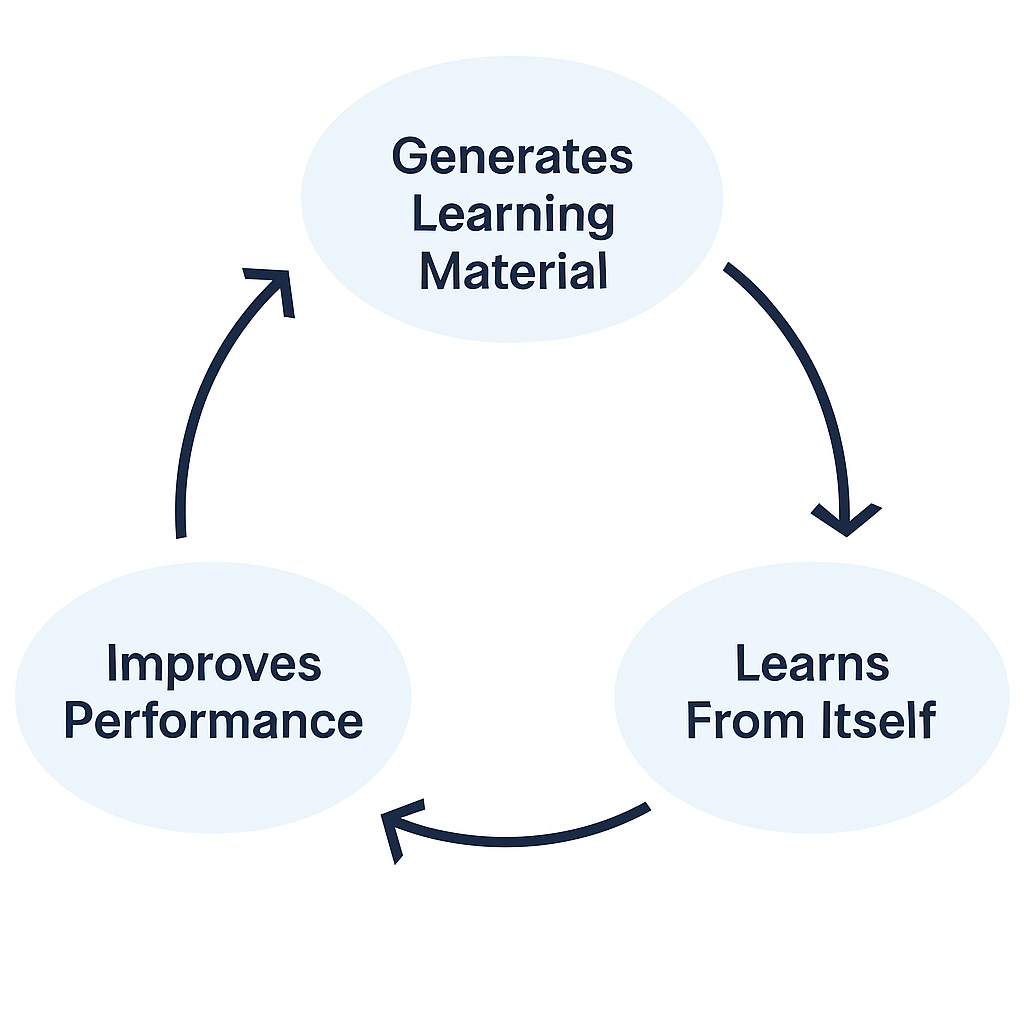

Imagine an AI that doesn't just learn from the data you give it, but generates its own training material and works out how to improve itself. MIT researchers have taken a step toward this with Self-Adapting Language Models (SEAL), models that rewrite and improve themselves [1].

The Student Analogy That Changes Everything

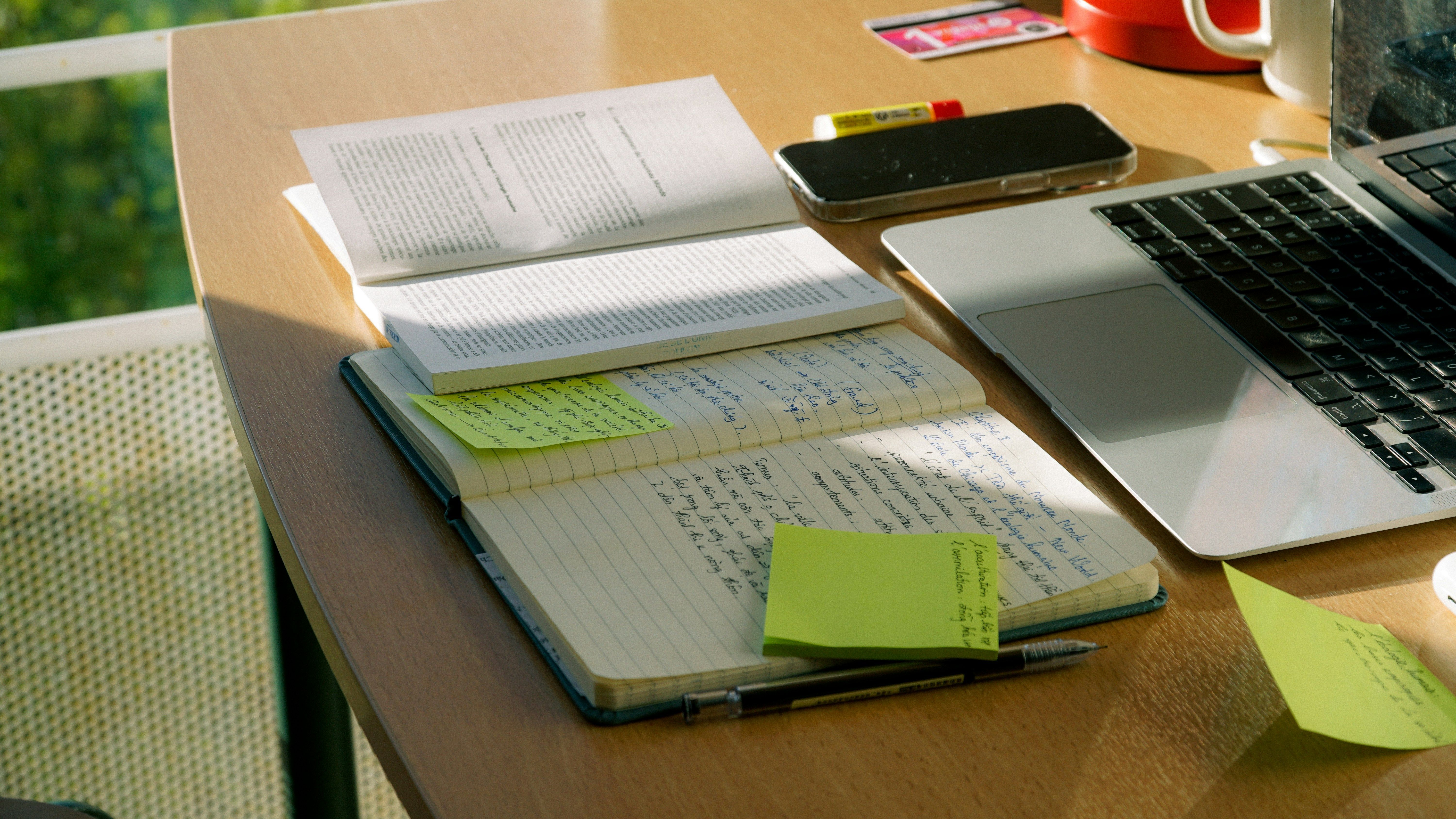

Think about how humans learn. When you're studying for an exam, you don't just read the textbook over and over. You take notes, create summaries, and perhaps draw diagrams. These actions are transforming the raw information into a format that works better for your brain.

That is what SEAL does for AI [1]. Instead of training on data "as-is," these models generate what the researchers call "self-edits": rewritten versions of the information that are easier for the model to learn from.

The SEAL Difference

Unlike traditional models that passively consume training data, SEAL actively generates and curates its own learning material, creating a feedback loop of continuous improvement.
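To make the "generate, then curate" loop concrete, here is a toy sketch in Python. It is not the researchers' implementation: the "model" is just a set of known facts, and `generate_self_edits`, `finetune`, `evaluate`, and `seal_step` are hypothetical stand-ins invented for illustration. The sketch only shows the selection half of the loop (keep a self-edit if training on it improves downstream answers); how the real system feeds that signal back into its self-edit generator is outside its scope.

```python
import random


def generate_self_edits(passage, n, rng):
    """Hypothetical stand-in for the model proposing rewrites of a passage.

    Each candidate keeps a random subset of the passage's facts, mimicking
    self-edits of varying quality.
    """
    return [[f for f in passage["facts"] if rng.random() < 0.6] for _ in range(n)]


def finetune(knowledge, self_edit):
    """Hypothetical stand-in for a weight update: returns a new knowledge set."""
    return knowledge | set(self_edit)


def evaluate(knowledge, questions):
    """Fraction of questions answerable without the passage in context."""
    return sum(q in knowledge for q in questions) / len(questions)


def seal_step(knowledge, passage, n_candidates=4, seed=0):
    """Try several self-edits and keep the one that helps most, if any."""
    rng = random.Random(seed)
    baseline = evaluate(knowledge, passage["questions"])
    best_edit, best_score = None, baseline
    for edit in generate_self_edits(passage, n_candidates, rng):
        score = evaluate(finetune(knowledge, edit), passage["questions"])
        if score > best_score:
            best_edit, best_score = edit, score
    # Curate: only commit an update that beat the baseline.
    if best_edit is not None:
        knowledge = finetune(knowledge, best_edit)
    return knowledge, baseline, best_score


# Toy data: in this sketch a "question" is answerable if its fact is known.
passage = {
    "facts": ["f1", "f2", "f3", "f4"],
    "questions": ["f1", "f2", "f3", "f4"],
}
knowledge, before, after = seal_step(set(), passage)
print(f"accuracy before: {before:.2f}, after: {after:.2f}")
```

The design choice worth noticing is that the score comes from questions answered after the update, not from how good the self-edit looks on its own.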

The Numbers Don't Lie

The results are impressive. In knowledge incorporation tasks, SEAL improved question-answering accuracy from 33.5% to 47.0% on SQuAD questions without any context, and outperformed synthetic data generated by GPT-4.1 [1].

For few-shot learning on reasoning tasks, SEAL achieved a 72.5% success rate, compared with 20% for self-edits generated without reinforcement learning training and 0% for standard in-context learning [1].
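For readers who want the size of these gains spelled out, the short Python snippet below computes them from the figures quoted above. The `gain` helper is a name introduced here for illustration; it distinguishes the absolute gain (in percentage points) from the relative gain (as a percentage of the starting value), which are easy to confuse.

```python
def gain(before, after):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = after - before
    relative = absolute / before * 100
    return absolute, relative


# Knowledge incorporation: 33.5% -> 47.0% accuracy.
qa_abs, qa_rel = gain(33.5, 47.0)
# Few-shot reasoning: 20% -> 72.5% success rate.
fewshot_abs, fewshot_rel = gain(20.0, 72.5)

print(f"QA: +{qa_abs:.1f} points ({qa_rel:.1f}% relative)")
print(f"Few-shot: +{fewshot_abs:.1f} points ({fewshot_rel:.1f}% relative)")
```

The 0% in-context baseline is left out because a relative gain over zero is undefined.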

Why This Matters for 2025 and Beyond

This breakthrough fits perfectly into the biggest AI trends of 2025 [4]. We're moving away from static, one-size-fits-all models toward AI that can adapt, specialize, and continuously improve. Industry experts predict that AI agents will handle increasingly complex tasks with greater autonomy [4].

The Future Is Adaptive

SEAL represents a fundamental shift toward AI that doesn't just process information but actively reshapes it for optimal learning, potentially solving the "data wall" problem as we run out of human-generated content.

The Road Ahead

Of course, SEAL isn't perfect yet. The researchers acknowledge challenges with catastrophic forgetting, where learning new concepts causes the model to forget older ones [1]. That is exactly the kind of problem that makes this research so exciting. The real breakthrough isn't just smarter AI, but AI that evolves by improving the way it learns.

As MIT Technology Review notes in its analysis of 2025 AI trends, "Having an AI tool that can operate in a similar way to a scientist is one of the fantasies of the tech sector" [2]. We might be looking at the first real step toward AI that truly improves itself rather than just following instructions.

The future isn't just about bigger models; it's about smarter ones. Stanford's latest AI Index reports a record $109 billion in U.S. private AI investment in 2024, mostly aimed at next-generation capabilities [3]. SEAL might just be showing us the way there.

References

- [1] Zweiger, Adam et al., "Self-Adapting Language Models," arXiv, 2025, [Online]

- [2] "What's next for AI in 2025," MIT Technology Review, January 2025, [Online]

- [3] "AI Index 2025: State of AI in 10 Charts," Stanford Institute for Human-Centered AI, 2025, [Online]

- [4] "6 AI trends you'll see more of in 2025," Microsoft News, 2025, [Online]
