Revolutionary Advancements in MoE Architectures

How Mixture-of-Experts (MoE) Models Are Redefining AI Efficiency and Scale

Imagine an AI system that functions as a specialized team of experts, in which each expert handles what they do best instead of all experts trying to solve all problems. This is the revolutionary concept behind MoE architectures, one of the most significant breakthroughs in making artificial intelligence both more powerful and more efficient.

Traditional AI models resemble one person attempting to be a doctor, lawyer, engineer, and chef simultaneously. MoE models, by contrast, employ a smart dispatcher who knows which specialist to call for each specific task. This approach delivers remarkable performance improvements while spending far less computation on each input.

Understanding How MoE Systems Work

At its core, the MoE system contains multiple specialized neural networks (the "experts") and a gating mechanism that decides which experts should handle each incoming piece of information. Think of it as having a team of specialists where a smart coordinator instantly determines whether a question needs a mathematician, a linguist, or a scientist to provide the best answer.

The key breakthrough is that instead of activating the entire massive model for every task, MoE systems only wake up the specific experts needed for each problem. This selective activation means you can have the knowledge capacity of a giant model while only using the computational power of a much smaller one.

Traditional dense AI models process every input through all of their components, which is simple to build but wasteful in terms of computation. MoE models, by contrast, route different types of inputs to different specialized pathways, ensuring that computational resources are used only where they provide the most value.
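The routing idea described above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: the experts are toy scalar functions, the gate scores are hard-coded (in a real model they would come from a learned router layer), and the names `top_k_route` and `moe_forward` are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    weights = top_k_route(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Four toy "experts"; each is just a scalar function here (an assumption).
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]  # hypothetical router output for one input

routing = top_k_route(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
print(routing, output)
```

Only experts 1 and 2 are evaluated for this input; the other two are never called, which is where the compute saving comes from.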

Recent Advances in MoE Design

Recent research has dramatically improved MoE systems through several key innovations. Google's Expert Choice routing [1] reversed the traditional approach: each expert selects the inputs (tokens) it will process, rather than each input competing for expert attention. This change alone improved training convergence time by more than 2x while achieving better performance.
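To make the reversal concrete, here is a rough sketch of expert-side selection. It assumes a small table of token-to-expert affinity scores and a fixed per-expert capacity; the function name `expert_choice_route` and the numbers are illustrative and are not taken from [1].

```python
def expert_choice_route(scores, capacity):
    """scores[t][e] is the affinity of token t for expert e.

    Each expert takes the `capacity` tokens it scores highest, so every
    expert ends up with exactly the same load.
    """
    num_tokens = len(scores)
    num_experts = len(scores[0])
    assignment = {}
    for e in range(num_experts):
        ranked = sorted(range(num_tokens), key=lambda t: scores[t][e], reverse=True)
        assignment[e] = ranked[:capacity]
    return assignment

# Hypothetical affinities for 4 tokens and 2 experts.
scores = [
    [0.9, 0.1],
    [0.8, 0.2],
    [0.3, 0.7],
    [0.6, 0.4],
]
assignment = expert_choice_route(scores, capacity=2)
print(assignment)
```

Because each expert fills a fixed number of slots, load balance holds by construction. The trade-off in this sketch is that a given token may be picked by several experts or by none.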

DeepSeek's approach [2] introduced ultra-fine specialization, creating many micro-specialists that can be combined in flexible ways, along with shared experts that handle common knowledge. Meanwhile, hierarchical architectures such as the Union of Experts [4] organize experts into multiple levels of decision-making, achieving 76% computational savings.
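The split between always-on shared experts and selectively activated routed experts can be sketched as follows. This is a simplified illustration loosely modeled on the description above, not the implementation from [2]: the experts are toy scalar functions, the affinities are hard-coded, and the residual connection used in real transformer layers is omitted.

```python
def shared_plus_routed(x, shared, routed, affinities, k):
    """Always apply shared experts; apply only the top-k routed experts."""
    top = sorted(range(len(routed)), key=lambda i: affinities[i], reverse=True)[:k]
    shared_out = sum(f(x) for f in shared)
    routed_out = sum(affinities[i] * routed[i](x) for i in top)
    return shared_out + routed_out, top

# One shared expert for common knowledge, four small routed specialists
# (all hypothetical toy functions).
shared = [lambda x: 0.5 * x]
routed = [lambda x: x + 1, lambda x: x - 1, lambda x: 2 * x, lambda x: 3 * x]
affinities = [0.1, 0.4, 0.3, 0.2]  # assumed router scores for one token

y, active = shared_plus_routed(2.0, shared, routed, affinities, k=2)
print(y, active)
```

The shared expert runs for every input, so the routed experts do not each need to relearn common patterns and can specialize more narrowly.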

These advances have enabled models such as DeepSeek-V3 to reach 671 billion total parameters while activating only 37 billion for each token [3], demonstrating that MoE architectures can scale to unprecedented sizes while maintaining remarkable efficiency.
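A quick calculation shows how sparse that activation is. The two parameter counts come from the text above; treating per-token compute as roughly proportional to active parameters is a simplifying assumption.

```python
total_params = 671e9   # total parameters reported for DeepSeek-V3
active_params = 37e9   # parameters activated per token

active_fraction = active_params / total_params
print(f"Active per token: {active_fraction:.1%}")
```

Under that assumption, each token touches only about one eighteenth of the model's weights.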

Real-World Impact: From Understanding to Action

MoE architectures excel in applications requiring multiple types of processing simultaneously. By allowing AI systems to develop specialized expertise for different sensory inputs and tasks, MoE enables seamless integration of visual processing, language understanding, and physical control. Recent breakthroughs show how this approach creates AI systems that can effectively see, hear, speak, and act in the world.

Multi-Sensory AI Understanding: The Uni3D-MoE framework demonstrates how AI systems can process different types of sensory information, combining visual, auditory, and tactile data through specialized expert networks [5]. Consider an AI that employs separate specialists for processing visual information, spatial relationships, and tactile feedback, all working together to understand complex environments.

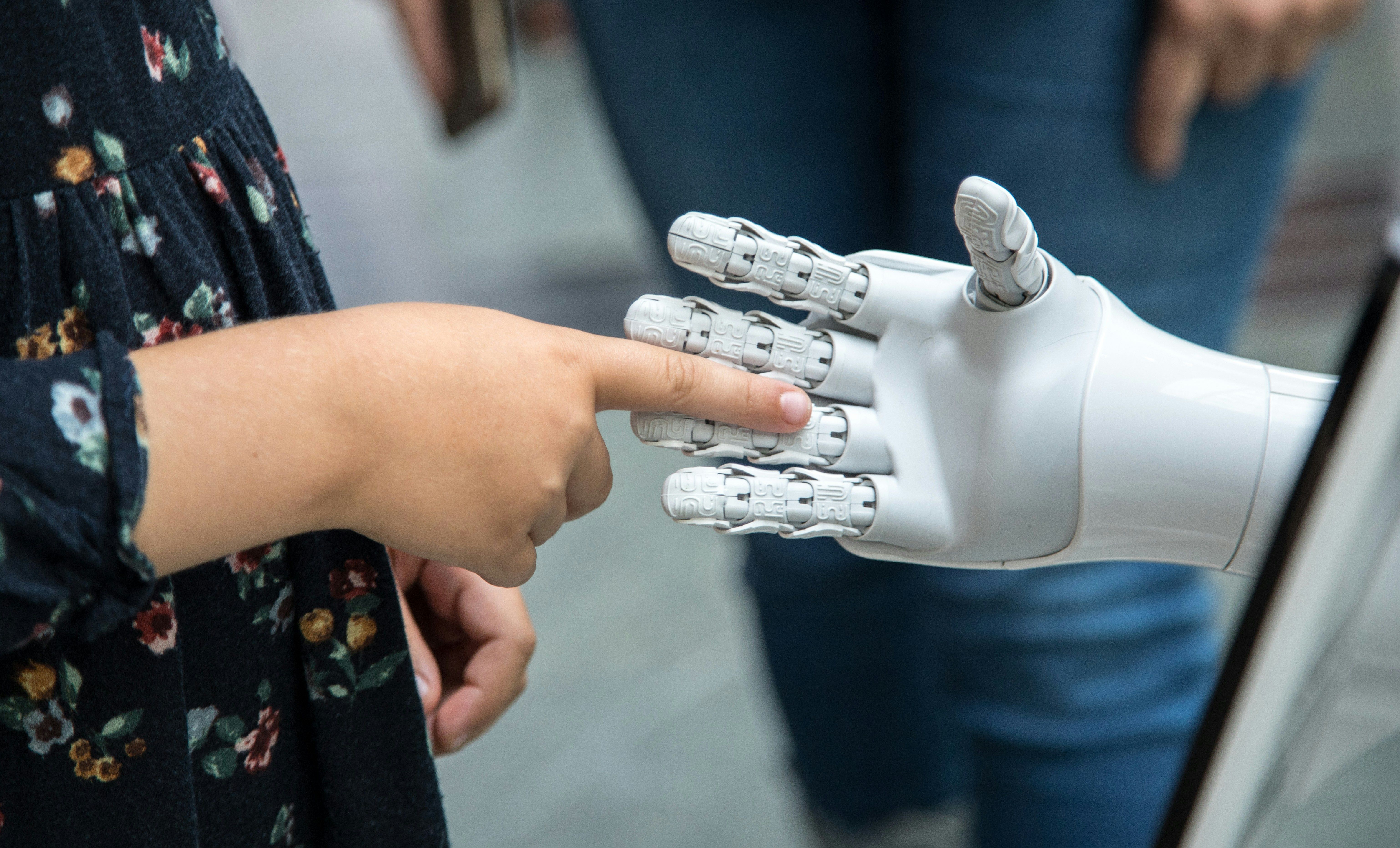

Intelligent Robot Systems: ChatVLA introduces systems where robots use different expert networks for understanding human communication versus performing physical tasks [6]. This resembles having one part of the robot's processing focused on conversation and another part dedicated to precise hand movements, preventing the common problem where robots that excel at communication struggle with physical coordination.

Natural Human-Robot Interaction: Research demonstrates how MoE systems can simultaneously process speech, gestures, eye movements, and physiological signals through specialized expert networks [7]. This enables robots to interact more naturally by employing dedicated specialists for each form of human communication.

The Future: Adaptive and Context-Aware Intelligence

Next-Generation Adaptive Systems

The next generation of MoE systems will feature even more sophisticated routing that adapts in real time based on task complexity and context. For example, an AI assistant could automatically scale its computational effort to the task, using simple processing for basic questions but dynamically engaging specialist experts when facing complex problems.
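One possible way to sketch this kind of adaptive effort is to let the number of active experts depend on how confident the router is. The example below is a hypothetical illustration, not a description of any specific system: it keeps adding experts until their combined gate probability passes a threshold, and the `threshold` and `max_experts` values are arbitrary assumptions.

```python
import math

def adaptive_route(gate_scores, threshold=0.7, max_experts=4):
    """Select as few experts as needed to cover `threshold` of the gate mass."""
    peak = max(gate_scores)
    exps = [math.exp(s - peak) for s in gate_scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen, mass = [], 0.0
    for i in ranked[:max_experts]:
        chosen.append(i)
        mass += probs[i]
        if mass >= threshold:
            break
    return chosen

easy = adaptive_route([5.0, 0.0, 0.0, 0.0])  # router is confident: one expert
hard = adaptive_route([1.0, 0.9, 0.8, 0.7])  # router is unsure: more experts
print(easy, hard)
```

In this sketch a clear-cut input activates a single expert, while an ambiguous one pulls in three, so compute scales with how uncertain the router is.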

MoE architectures represent a fundamental shift toward building AI systems that are both incredibly capable and remarkably efficient. These architectures enable researchers to create models with trillions of parameters that can run on everyday hardware, while powering the next generation of multi-sensory AI systems that can see, hear, speak, and act in the world.

The combination of intelligent expert routing, hierarchical architectures, and specialized knowledge systems promises to make sophisticated AI capabilities available to anyone with innovative ideas and modest computing resources. This democratization of AI power, coupled with unprecedented efficiency, sets the stage for innovations we can barely envision today.

The era of MoE represents more than just making AI better. It involves making advanced AI accessible to everyone while building systems that work smarter, not harder.

References

[1] Zhou, Y., Lei, T., Liu, H., Du, N., Huang, Y., Zhao, V., Dai, A., Chen, Z., Le, Q., and Laudon, J., "Mixture-of-Experts with Expert Choice Routing," ArXiv, 2022.

[2] Dai, D., Deng, C., Zhao, C., et al., "DeepSeekMoE: Towards Ultimate Expert Specialization in Mixture-of-Experts Language Models," Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, pp. 1280-1297, 2024.

[3] DeepSeek-AI, "DeepSeek-V3 Technical Report," ArXiv, 2024.

[4] Yang, Y., Zhang, S., and Chen, L., "Union of Experts: Adapting Hierarchical Routing to Equivalently Decomposed Transformer," ArXiv, 2025.

[5] "Uni3D-MoE: Scalable Multimodal 3D Scene Understanding via Mixture of Experts," ArXiv, 2025.

[6] "ChatVLA: Unified Multimodal Understanding and Robot Control with Vision-Language-Action Model," ArXiv, 2025.

[7] Prasad, A., Liu, Y., and Thompson, R., "MoVEInt: Mixture of Variational Experts for Learning Human–Robot Interactions from Demonstrations," ArXiv, 2024.
