Humans can keep learning throughout their lives while retaining most of what they already know. Artificial neural networks, by contrast, struggle with what scientists call the "stability-plasticity dilemma": a system must be plastic enough to absorb new information yet stable enough to keep what it has already learned. This fundamental challenge has been a significant barrier to developing truly adaptive AI systems capable of lifelong learning.

The Stability-Plasticity Dilemma Explained

When artificial neural networks learn new information, they often rapidly lose previously acquired knowledge, a phenomenon known as catastrophic forgetting. Whereas humans tend to forget gradually, this loss is "catastrophic" in both severity and speed: a network can sometimes lose its performance on a previously mastered task completely after even brief exposure to new data[1].
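The effect is easy to reproduce at toy scale. The following is a minimal sketch, assuming a one-weight linear model and two deliberately conflicting tasks; all names are illustrative and none of it is taken from [1]. It trains on task A, then on task B with plain gradient descent, and measures the error on task A before and after.

```python
def mse(w, data):
    """Mean squared error of the one-weight model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w, data, lr=0.05, epochs=200):
    """Plain full-batch gradient descent on the MSE loss."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]   # task A wants w = 2
task_b = [(x, -2.0 * x) for x in xs]  # task B wants w = -2 (conflicts with A)

w_after_a = train(0.0, task_a)
loss_a_before = mse(w_after_a, task_a)  # close to zero: task A is learned

w_after_b = train(w_after_a, task_b)    # continue training on task B only
loss_a_after = mse(w_after_b, task_a)   # large: task A has been overwritten

print(f"task A loss before/after learning B: {loss_a_before:.2e} / {loss_a_after:.2f}")
```

Nothing in plain gradient descent protects the earlier solution, so the single shared weight simply moves to wherever the most recent task pulls it.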

The biological inspiration for solutions comes from the mammalian brain, which avoids catastrophic forgetting through specialized mechanisms that protect previously acquired knowledge. When mice learn new skills, specific excitatory synapses are strengthened, manifesting as increased volume in dendritic spines that persist despite subsequent learning of other tasks[1].
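Kirkpatrick et al. [1] translate this idea into elastic weight consolidation (EWC), which adds a quadratic penalty that anchors each weight to its old value in proportion to how important that weight was for the earlier task. The sketch below is a simplified illustration under stated assumptions: the importance values are set by hand rather than estimated from the Fisher information as in the paper, and the new-task loss is a made-up quadratic that pulls every weight toward zero.

```python
def ewc_penalty_grad(theta, theta_star, importance, lam):
    """Gradient of (lam / 2) * sum_i importance_i * (theta_i - theta_star_i)^2."""
    return [lam * f * (t - s) for t, s, f in zip(theta, theta_star, importance)]


def new_task_grad(theta):
    """Gradient of a hypothetical new-task loss sum_i theta_i^2 (assumption)."""
    return [2.0 * t for t in theta]


def train_with_ewc(theta_star, importance, lam, lr=0.05, steps=300):
    """Gradient descent on new-task loss plus the consolidation penalty."""
    theta = list(theta_star)  # start from the old-task solution
    for _ in range(steps):
        g_task = new_task_grad(theta)
        g_pen = ewc_penalty_grad(theta, theta_star, importance, lam)
        theta = [t - lr * (a + b) for t, a, b in zip(theta, g_task, g_pen)]
    return theta


theta_star = [2.0, -1.0]   # weights after the old task
importance = [10.0, 0.1]   # hand-set: first weight matters, second barely does

theta_ewc = train_with_ewc(theta_star, importance, lam=1.0)
theta_plain = train_with_ewc(theta_star, importance, lam=0.0)  # no protection

print("with penalty:   ", [round(t, 3) for t in theta_ewc])
print("without penalty:", [round(t, 3) for t in theta_plain])
```

With the penalty, the important weight stays close to its old value while the unimportant one is free to move; without it, both weights are overwritten by the new task.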

Promising Approaches to the Dilemma

Researchers have developed several promising approaches to address this challenge:

Spiking Neural Networks

Evidence suggests that spiking neurons provide inherent stability advantages over traditional artificial neural networks. When combined with appropriate plasticity mechanisms, Spiking Neural Networks (SNNs) demonstrate improved resilience against catastrophic forgetting[2].
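For readers unfamiliar with spiking neurons, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook spiking model. It is not the model or plasticity rule used in [2], and the parameter values are arbitrary assumptions. The point it illustrates is the threshold behavior: weak input produces no output at all, while stronger input produces discrete spikes.

```python
def simulate_lif(current, steps, tau=10.0, dt=1.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron driven by a constant current.

    Returns a list with 1 at time steps where the neuron spiked, else 0.
    """
    v = v_rest
    spikes = []
    for _ in range(steps):
        # Membrane potential leaks toward rest and integrates the input.
        v += (dt / tau) * (-(v - v_rest) + current)
        if v >= v_thresh:
            spikes.append(1)
            v = v_reset  # reset after emitting a spike
        else:
            spikes.append(0)
    return spikes


weak = simulate_lif(current=0.5, steps=70)    # potential settles below threshold
strong = simulate_lif(current=2.0, steps=70)  # potential repeatedly crosses it

print("spikes with weak input:  ", sum(weak))
print("spikes with strong input:", sum(strong))
```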

Context Gating

Context-gated Spiking Neural Networks (CG-SNN) integrate local and global plasticity mechanisms to strengthen connections between task neurons and hidden neurons, preserving multi-task relevant information and demonstrating better task-selectivity during lifelong learning[3].
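The gating idea itself can be shown without spiking dynamics. The following sketch is a generic context-gated layer, not the CG-SNN architecture or its local and global plasticity rules from [3]; the weights, gate patterns, and task names are hypothetical. Each task switches on its own subset of hidden units, so activity (and therefore any activity-dependent learning) for one task leaves the other task's units untouched.

```python
def relu(value):
    return max(0.0, value)


def gated_hidden(x, weights, gate):
    """Hidden activations where each unit is multiplied by a 0/1 task gate."""
    hidden = []
    for row, g in zip(weights, gate):
        pre_activation = sum(w * xi for w, xi in zip(row, x))
        hidden.append(relu(pre_activation) * g)
    return hidden


# Four hidden units, two inputs (illustrative values).
weights = [
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 1.0],
    [2.0, -0.5],
]

# Hypothetical context signals: each task owns two of the four units.
gates = {
    "task_a": [1, 1, 0, 0],
    "task_b": [0, 0, 1, 1],
}

x = [1.0, 2.0]
h_a = gated_hidden(x, weights, gates["task_a"])
h_b = gated_hidden(x, weights, gates["task_b"])

print("task A hidden activity:", h_a)
print("task B hidden activity:", h_b)
```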

Hybrid Neural-Symbolic Architectures

A particularly promising approach is the Neuro-Symbolic Brain-Inspired Continual Learning (NeSyBiCL) framework. This architecture incorporates two complementary subsystems:

- A neural network model that quickly adapts to the most recent task

- A symbolic reasoner dedicated to retaining previously acquired knowledge[4]

An integration mechanism transfers knowledge between the two components, allowing the symbolic reasoner to inject additional supervision into the neural model. The symbolic component breaks tasks into meaningful subconcepts and solves them through logical deductions. While it may not be as accurate as the neural network on the most recent task, it demonstrates significant immunity to catastrophic forgetting[4].
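One intuition for why a symbolic store resists forgetting is that new rules are added next to old ones rather than written over shared weights. The toy sketch below illustrates only that intuition. The `RuleStore` class, its rule format, and the example concepts are all hypothetical and are not the NeSyBiCL reasoner described in [4].

```python
class RuleStore:
    """Toy symbolic memory: each rule maps a set of sub-concepts to a label."""

    def __init__(self):
        self.rules = {}

    def add_rule(self, concepts, label):
        # Adding a rule never modifies rules learned for earlier tasks.
        self.rules[frozenset(concepts)] = label

    def deduce(self, observed):
        """Return the label of the first rule whose concepts are all observed."""
        observed = set(observed)
        for concepts, label in self.rules.items():
            if concepts <= observed:
                return label
        return None


store = RuleStore()

# Task 1: animals (illustrative sub-concepts).
store.add_rule({"feathers", "beak"}, "bird")
before = store.deduce({"feathers", "beak", "small"})

# Task 2: vehicles, learned later.
store.add_rule({"wheels", "engine"}, "car")
after = store.deduce({"feathers", "beak", "small"})
new_task = store.deduce({"wheels", "engine", "red"})

print(before, after, new_task)
```

The answer for the first task is unchanged after the second task is learned, which is the behavior a gradient-trained network does not give by default.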

"We believe that the main missing ingredient in AI so far is cognitive synergy: the fitting-together of different intelligent components into an appropriate cognitive architecture, in such a way that the components richly and dynamically support and assist each other, interrelating very closely in a similar manner to the components of the brain or body and thus giving rise to appropriate emergent structures and dynamics." — Ben Goertzel, CogPrime Overview

Advanced Memory Mechanisms

3D Spatial MultiModal Memory

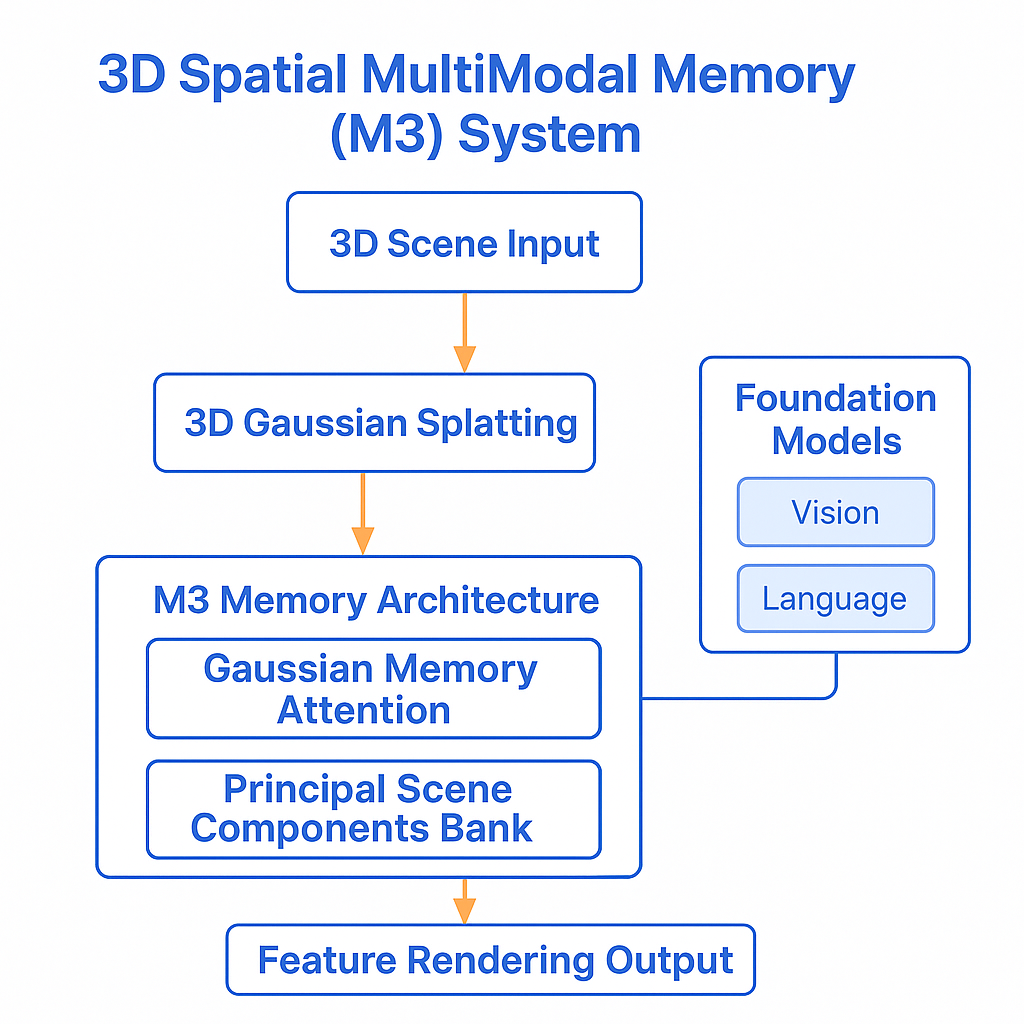

The 3D Spatial MultiModal Memory (M3) integrates 3D Gaussian Splatting techniques with foundation models to build a multimodal memory capable of rendering feature representations across granularities. Instead of directly storing high-dimensional features in Gaussian primitives, M3 stores them in a memory bank called "principal scene components" and uses low-dimensional "principal queries" as indices[5].
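The storage idea can be sketched as a soft lookup: each primitive keeps only a short query vector, and the full feature is reconstructed from a shared bank when needed. The code below is a rough illustration under loud assumptions: it treats each query as one score per bank entry and combines bank rows with softmax weights. M3's actual formulation in [5] may differ, and the sizes and values here are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def lookup(query, bank):
    """Reconstruct a high-dimensional feature as a weighted mix of bank rows."""
    weights = softmax(query)
    dim = len(bank[0])
    return [sum(w * row[j] for w, row in zip(weights, bank)) for j in range(dim)]


# Shared memory bank: 3 entries of 6-dimensional features (illustrative).
bank = [
    [1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0, 1.0, 1.0],
]

# Each primitive stores 3 numbers instead of 6 (assumed query layout).
queries = [
    [20.0, 0.0, 0.0],  # points almost entirely at bank entry 0
    [0.0, 0.0, 0.0],   # equal mix of all entries
]

features = [lookup(q, bank) for q in queries]
for q, f in zip(queries, features):
    print(q, "->", [round(v, 3) for v in f])
```

The saving grows with the gap between the feature dimension and the query length, since the bank is stored once and shared by every primitive.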

The Road Ahead

The integration of neural and symbolic approaches, multimodal representation learning, and advanced memory mechanisms represents significant progress toward addressing the stability-plasticity dilemma. Biologically-inspired mechanisms such as selective synaptic consolidation, context gating, and neuronal memory allocation provide valuable insights for developing artificial systems with improved cognitive flexibility.

As these technologies continue to advance, we can anticipate AI systems with increasingly human-like abilities to learn and adapt continuously while retaining previously acquired knowledge. That would open new possibilities across many domains, from personalized education and healthcare to more natural human-computer interaction and adaptive autonomous systems.

References

- [1] Kirkpatrick, J. et al., "Overcoming catastrophic forgetting in neural networks," Proceedings of the National Academy of Sciences, 2017.

- [2] Schmidgall, S., "Spiking neurons as a solution to instability in plastic neural networks," arXiv, 2021.

- [3] Shen, J. et al., "Context Gating in Spiking Neural Networks: Achieving Lifelong Learning through Integration of Local and Global Plasticity," arXiv, 2024.

- [4] Banayeeanzade, A., "Hybrid Learners Do Not Forget: A Brain-Inspired Neuro-Symbolic Approach to Continual Learning," arXiv, 2025.

- [5] Zou, X. et al., "M3: 3D-Spatial MultiModal Memory," arXiv, 2025.
